Recommended in this issue is DataHub, an open source metadata platform for modern data stacks.

DataHub is a modern data catalog designed to support end-to-end data discovery, data observability, and data governance. This scalable metadata platform is built for developers to tame the complexity of their rapidly evolving data ecosystems, and for data practitioners to get the full value of the data within their organizations.


1 Search across databases, data lakes, BI platforms, ML feature stores, and workflow orchestration

Here is an example of searching for assets related to the term health: the results span Looker dashboards, BigQuery datasets, and DataHub tags and users, and we finally navigate to the “DataHub Health” Looker dashboard overview.

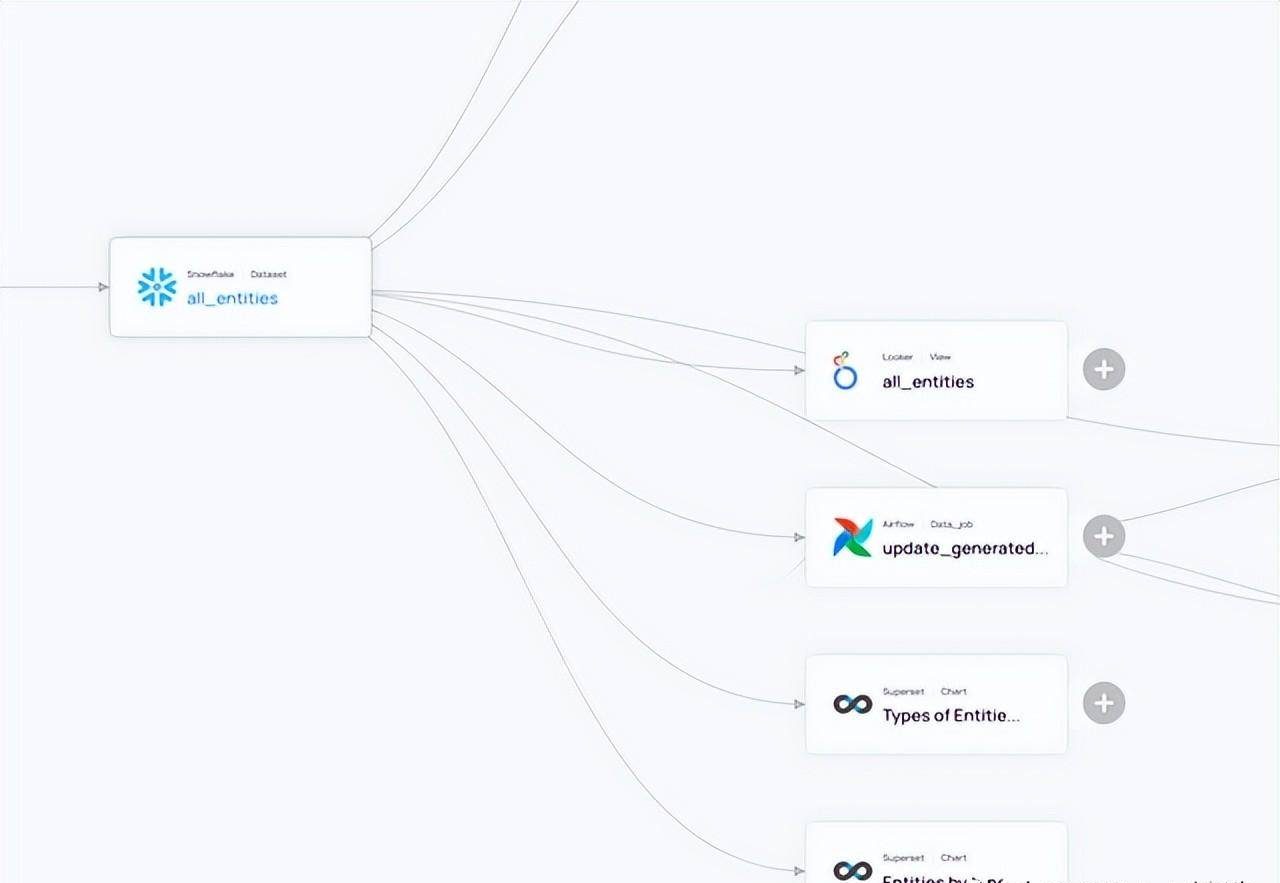

2 Lineage across platforms, datasets, pipelines, and charts

Using the lineage view, we can navigate all upstream dependencies of the dashboard, including Looker charts, Snowflake and S3 datasets, and Airflow pipelines.

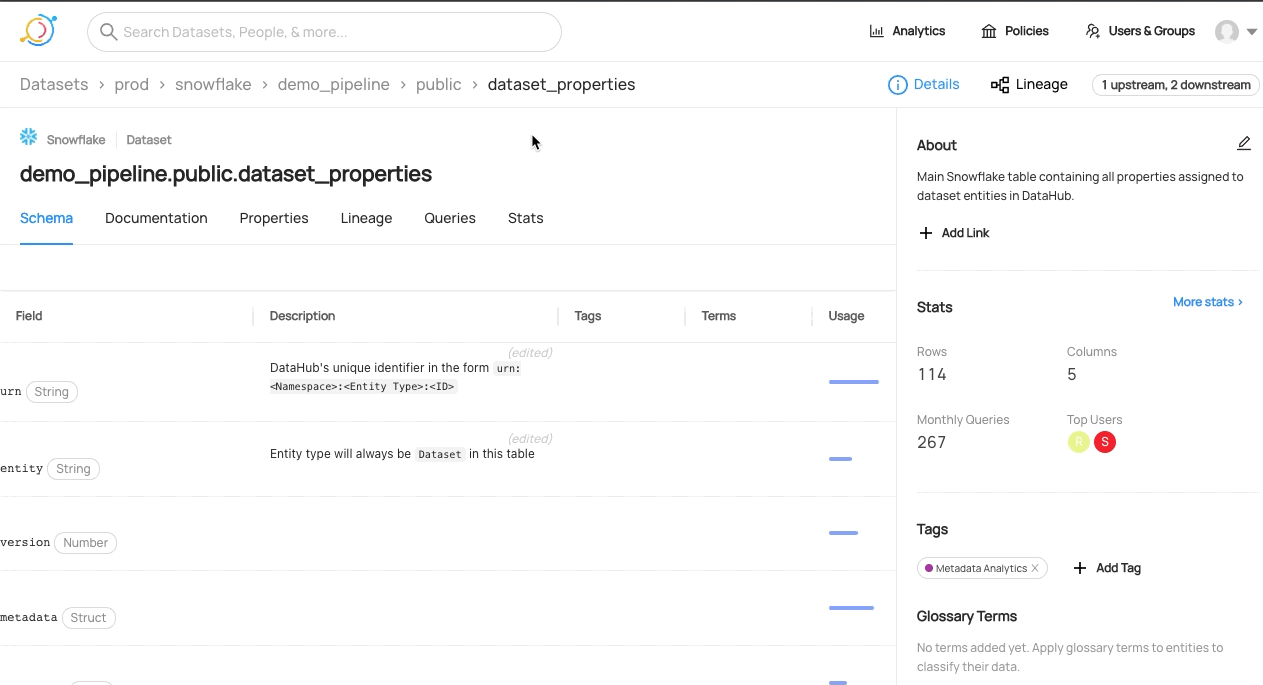

3 Dataset profiling and usage statistics

DataHub provides dataset profiling and usage statistics for popular data warehouse platforms, making it easy for data practitioners to understand the shape of the data and how it evolves over time.

4 Powerful documentation

DataHub makes it easy to update and maintain documentation as definitions and use cases evolve. In addition to managing documentation through GMS, DataHub supports rich documentation and external links through the UI.

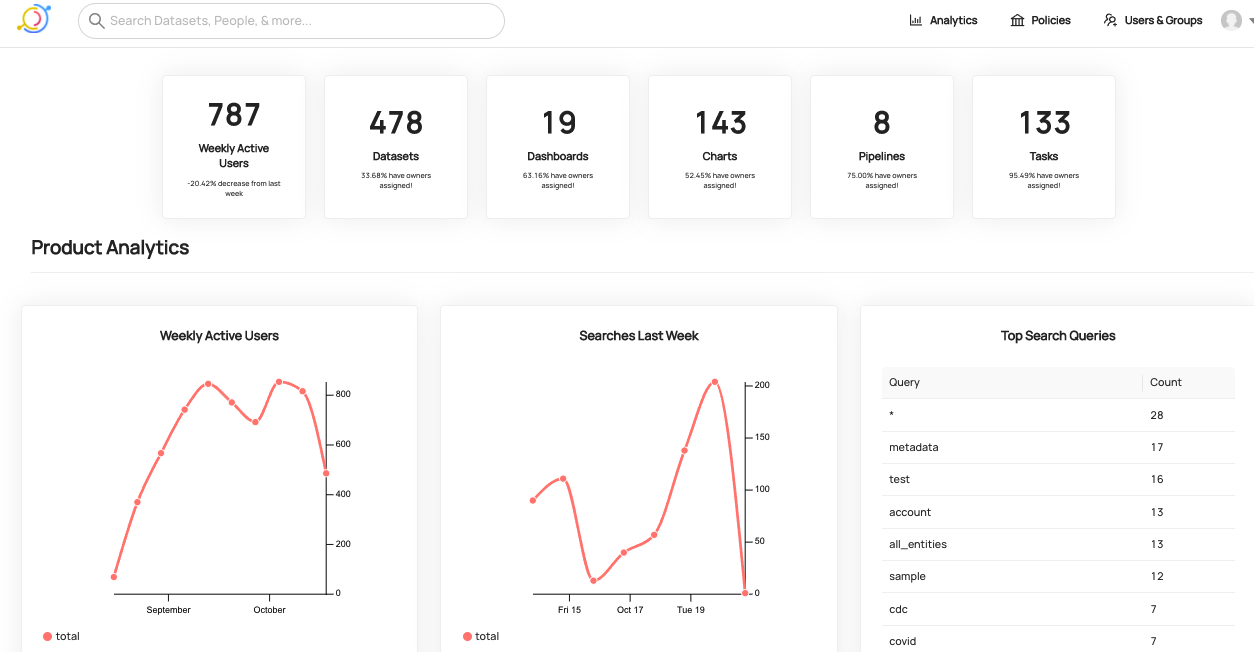

5 Metadata quality and usage

Gain insight into the health of metadata in DataHub and how end users interact with the platform. The Analytics view provides a snapshot of the volume of assets and the percentage with assigned ownership, weekly active users, and the most common searches and actions.

Install and deploy

1 Install docker, jq, and docker-compose (if using Linux). Ensure that sufficient hardware resources are allocated to the Docker engine. Tested and validated configuration: 2 CPUs, 8GB RAM, 2GB swap area, and 10GB disk space.

2 Start the Docker engine from the command line or desktop application.

3 Install the DataHub CLI by running the following commands in the terminal:

python3 -m pip install --upgrade pip wheel setuptools

python3 -m pip uninstall datahub acryl-datahub || true # sanity check - ok if it fails

python3 -m pip install --upgrade acryl-datahub

datahub version

If you see ‘command not found’, try running the CLI command with the prefix ‘python3 -m’:

python3 -m datahub version

4 To deploy DataHub, run the following CLI command from the terminal:

datahub docker quickstart

5 To ingest sample metadata, run the following CLI command from the terminal:

datahub docker ingest-sample-data

6 To clear all of DataHub’s state (for example, before ingesting your own metadata), you can use the CLI nuke command:

datahub docker nuke

If you want to delete the containers but keep the data, add the --keep-data flag to the command. You can then run the quickstart command again to bring DataHub back up with the data you ingested previously.
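When these steps are scripted, the ‘command not found’ fallback from step 3 can be handled in one place. The following is a minimal Python sketch, not part of DataHub itself: the helper names `datahub_command` and `quickstart_commands` are made up for illustration, and the sketch uses the running interpreter (`sys.executable`) where the article uses `python3`.

```python
import shutil
import sys


def datahub_command(*args, which=shutil.which):
    """Build the argv for a DataHub CLI call.

    Prefers the `datahub` executable and falls back to running the module
    with the current interpreter if the executable is not on PATH.
    `which` is injectable only to make the function easy to test.
    """
    if which("datahub"):
        return ["datahub", *args]
    return [sys.executable, "-m", "datahub", *args]


# The deploy and sample-data steps from the article, in order.
QUICKSTART_STEPS = [
    ("docker", "quickstart"),
    ("docker", "ingest-sample-data"),
]


def quickstart_commands(which=shutil.which):
    """Return the argv list for each quickstart step."""
    return [datahub_command(*step, which=which) for step in QUICKSTART_STEPS]
```

Each returned list can be passed to `subprocess.run(cmd, check=True)` once the Docker engine is running.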

Introduction to metadata ingestion

This module hosts an extensible Python-based metadata ingestion system for DataHub. It can send metadata to DataHub over Kafka or through the REST API, and it can be used through the CLI tool, from orchestrators like Airflow, or as a library.

Before running any metadata ingestion job, you should ensure that the DataHub backend services are running.
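One way to check this from a script is to probe the backend over HTTP before starting a job. The sketch below assumes that the metadata service listens on `http://localhost:8080` (the address used by the sink in the recipe example) and exposes a `/health` endpoint; that path is an assumption and should be checked against your deployment. The function name `backend_is_up` is hypothetical.

```python
import urllib.error
import urllib.request


def backend_is_up(base_url="http://localhost:8080", timeout=5.0,
                  opener=urllib.request.urlopen):
    """Return True if the backend answers the health probe with HTTP 200.

    The `/health` path is an assumption, not taken from the article.
    `opener` is injectable so the check can be tested without a network.
    """
    url = base_url.rstrip("/") + "/health"
    try:
        with opener(url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, or a non-2xx response.
        return False
```

A wrapper script could call `backend_is_up()` and exit early with a clear message instead of letting the ingestion job fail at the sink.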

A recipe is a configuration file that tells the ingestion script where to extract metadata from (the source) and where to put it (the sink). Here is a simple example that extracts metadata from MSSQL (the source) and sends it to DataHub over REST (the sink).

# A sample recipe that pulls metadata from MSSQL and puts it into DataHub
# using the REST API.
source:
  type: mssql
  config:
    username: sa
    password: ${MSSQL_PASSWORD}
    database: DemoData

transformers:
  - type: "fully-qualified-class-name-of-transformer"
    config:
      some_property: "some.value"

sink:
  type: "datahub-rest"
  config:
    server: "http://localhost:8080"
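The `${MSSQL_PASSWORD}` entry keeps the password out of the recipe file and takes it from the environment instead. To make that substitution concrete, here is a minimal Python sketch that holds the same recipe as a dictionary and resolves `${NAME}` placeholders. It only illustrates the idea; how DataHub itself resolves placeholders may differ, and `resolve_placeholders` is a hypothetical helper.

```python
import os
import re

# Matches ${NAME} placeholders such as ${MSSQL_PASSWORD}.
_PLACEHOLDER = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_]*)\}")


def resolve_placeholders(value, env=None):
    """Return a copy of `value` with ${NAME} replaced from `env`.

    Walks nested dicts and lists; raises KeyError if a variable is unset.
    """
    env = os.environ if env is None else env
    if isinstance(value, dict):
        return {key: resolve_placeholders(item, env) for key, item in value.items()}
    if isinstance(value, list):
        return [resolve_placeholders(item, env) for item in value]
    if isinstance(value, str):
        def substitute(match):
            name = match.group(1)
            if name not in env:
                raise KeyError(f"environment variable {name} is not set")
            return env[name]
        return _PLACEHOLDER.sub(substitute, value)
    return value


# The MSSQL-to-DataHub recipe from the article, without the optional transformers.
recipe = {
    "source": {
        "type": "mssql",
        "config": {
            "username": "sa",
            "password": "${MSSQL_PASSWORD}",
            "database": "DemoData",
        },
    },
    "sink": {
        "type": "datahub-rest",
        "config": {"server": "http://localhost:8080"},
    },
}
```

Calling `resolve_placeholders(recipe)` with `MSSQL_PASSWORD` exported in the shell returns a new dictionary with the password filled in and leaves the original untouched.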

To run the recipe from the CLI, install the required plugin and then run the ingest command:

pip install 'acryl-datahub[datahub-rest]' # install the required plugin

datahub ingest -c ./examples/recipes/mssql_to_datahub.yml

The --dry-run option of the ingest command performs all ingestion steps except writing to the sink. This helps confirm that the recipe produces the expected work units before they are ingested into DataHub.

# Dry run

datahub ingest -c ./examples/recipes/example_to_datahub_rest.yml --dry-run

# Short-form

datahub ingest -c ./examples/recipes/example_to_datahub_rest.yml -n

The --preview option of the ingest command performs all ingestion steps, but limits processing to the first 10 work units produced by the source. This makes it a quick end-to-end smoke test for an ingestion recipe.

# Preview

datahub ingest -c ./examples/recipes/example_to_datahub_rest.yml --preview

# Preview with dry-run

datahub ingest -c ./examples/recipes/example_to_datahub_rest.yml -n --preview

If you want to modify the metadata before it reaches the ingestion sink (for example, to add owners or tags), you can use a transformer: write your own module and plug it into DataHub.
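Before writing a custom module, it may be enough to add a built-in transformer to the recipe. The sketch below makes two assumptions that are not taken from this article and should be checked against the installed version: that a transformer of type `simple_add_dataset_ownership` with an `owner_urns` setting is available, and that the library entry point is `Pipeline` in `datahub.ingestion.run.pipeline`. The owner URN and the helper names are placeholders.

```python
import copy


def with_extra_owners(recipe, owner_urns):
    """Return a copy of a recipe dict with an ownership transformer appended."""
    updated = copy.deepcopy(recipe)
    updated.setdefault("transformers", []).append(
        {
            # Assumed built-in transformer type; verify it for your version.
            "type": "simple_add_dataset_ownership",
            "config": {"owner_urns": list(owner_urns)},
        }
    )
    return updated


def run_recipe(recipe):
    """Run a recipe through the ingestion library (requires acryl-datahub)."""
    # Imported lazily so the helper above works without the package installed.
    from datahub.ingestion.run.pipeline import Pipeline

    pipeline = Pipeline.create(recipe)
    pipeline.run()
    pipeline.raise_from_status()


base_recipe = {
    "source": {
        "type": "mssql",
        "config": {"username": "sa", "password": "${MSSQL_PASSWORD}", "database": "DemoData"},
    },
    "sink": {"type": "datahub-rest", "config": {"server": "http://localhost:8080"}},
}

# "urn:li:corpuser:jdoe" is a placeholder owner, not a real user.
recipe = with_extra_owners(base_recipe, ["urn:li:corpuser:jdoe"])
```

`run_recipe(recipe)` would then perform the same ingestion as the CLI, with the extra owner attached on the way to the sink; it needs the backend running and the source plugin installed.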


Open source: Apache-2.0 License