This issue recommends a Python-based sign language image recognition system: hand-keras-yolo3-recognize.

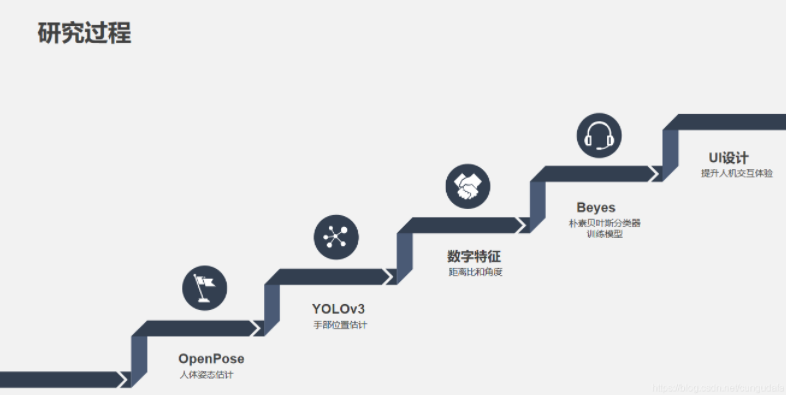

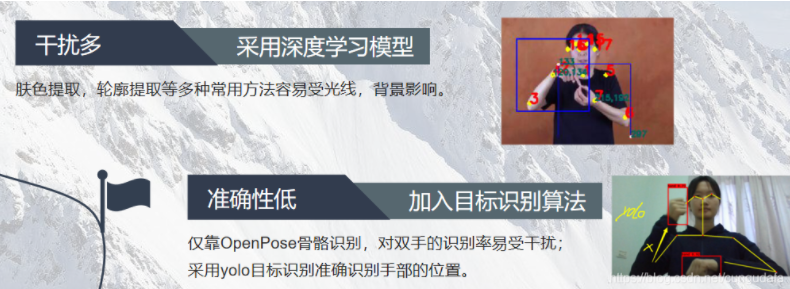

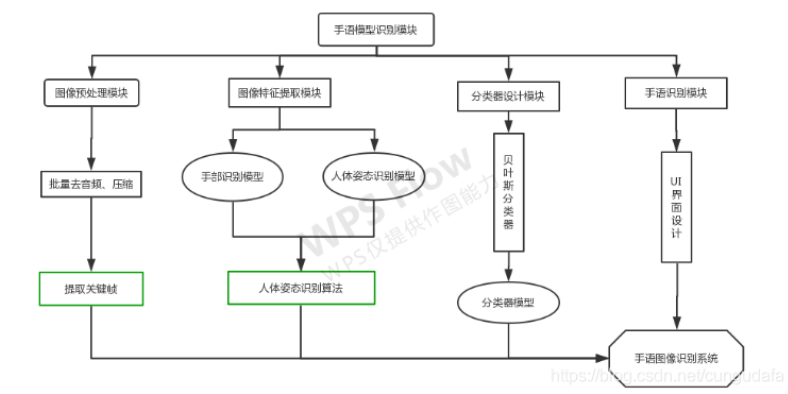

hand-keras-yolo3-recognize is a sign language image recognition system based on human pose research. It uses the open source OpenPose human pose model and a self-trained YOLOv3 hand model to detect poses and hands in videos and images. A classifier model then makes a prediction from the extracted numerical features, and the result is displayed as text.

Hardware and software environment:

The sign language image recognition system combines hardware and software. The hardware is mainly a monocular camera for capturing sign language images. On the software side, video is processed with ffmpeg, the Python 3.6 development environment is configured under Anaconda, and the OpenPose model is compiled with CMake. The image algorithms in OpenCV are then used from the VS Code editor to write all programs of the system, and the main interface is designed with wxFormBuilder.

Hardware environment

The main hardware devices used to capture sign language video and images are a laptop camera and a mobile phone camera. The hardware environment the program runs on is as follows:

(1) Operating system: Windows 10 Home Edition, 64-bit

(2) CPU: Intel(R) Core(TM) i5-8300H, base frequency: 2.30 GHz

(3) Memory: 8 GB

Software environment

(1) Video processing tool: ffmpeg-20181115

(2) Integrated development environment: Microsoft Visual Studio Code, Anaconda3

(3) Interface design tool: wxFormBuilder

(4) Programming language: Python 3.6

System function design:

1. Video frame processing

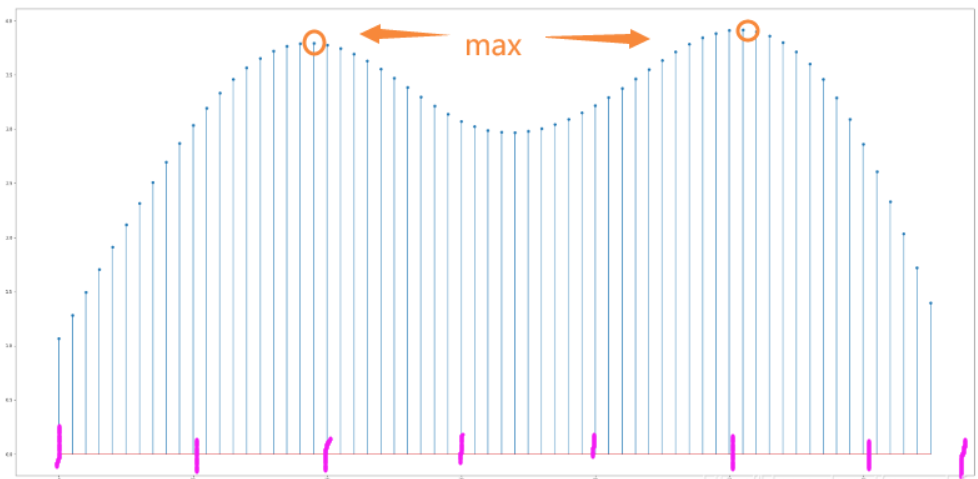

The cv2.imwrite() function in OpenCV is used to save frames from the video. To process a video, key frames are extracted by computing the average difference between consecutive frames and keeping the frames where this difference reaches a local maximum.
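The key frame selection described above can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: the function names (`frame_differences`, `local_maxima`, `select_keyframes`, `save_keyframes`) and the output file naming are assumptions made for this example, and the difference measure is assumed to be the mean absolute pixel difference.

```python
import numpy as np


def frame_differences(frames):
    """Mean absolute pixel difference between each frame and the one before it."""
    diffs = []
    for prev, curr in zip(frames, frames[1:]):
        # Cast to a signed type so uint8 subtraction cannot wrap around.
        delta = np.abs(curr.astype(np.int32) - prev.astype(np.int32))
        diffs.append(float(delta.mean()))
    return diffs


def local_maxima(values):
    """Indices whose value is strictly greater than both neighbours."""
    return [
        i for i in range(1, len(values) - 1)
        if values[i] > values[i - 1] and values[i] > values[i + 1]
    ]


def select_keyframes(frames):
    """Return indices of frames that follow a locally largest change."""
    diffs = frame_differences(frames)
    # diffs[i] compares frame i with frame i + 1, so report frame i + 1.
    return [i + 1 for i in local_maxima(diffs)]


def save_keyframes(video_path, out_dir):
    """Read a video with OpenCV and write its key frames as JPEG files.

    Hypothetical helper: out_dir must already exist, and all frames are
    held in memory, which is only reasonable for short clips.
    """
    import cv2  # imported here so the helpers above run without OpenCV

    cap = cv2.VideoCapture(video_path)
    frames = []
    while True:
        ok, frame = cap.read()
        if not ok:
            break
        frames.append(frame)
    cap.release()
    for idx in select_keyframes(frames):
        cv2.imwrite(f"{out_dir}/keyframe_{idx:05d}.jpg", frames[idx])
```

In practice the difference curve is often smoothed before looking for local maxima, so that small fluctuations do not produce too many key frames. That step is left out here to keep the sketch short.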

More: Python+OpenCV2 (III) Save video keyframes (cungudafa's blog, CSDN)

2. OpenPose human pose recognition

OpenPose human skeleton and gestures: still image labeling and classification (with source code) (cungudafa's blog, CSDN)

OpenPose human skeleton and gestures: still image labeling and classification 2 (with source code) (cungudafa's blog, CSDN)

Key points 4 and 7 of the human pose (the wrists) are not enough on their own to locate the hands and easily lead to misjudgment, so YOLO hand recognition was introduced in the final design.

3. YOLOv3 hand model training

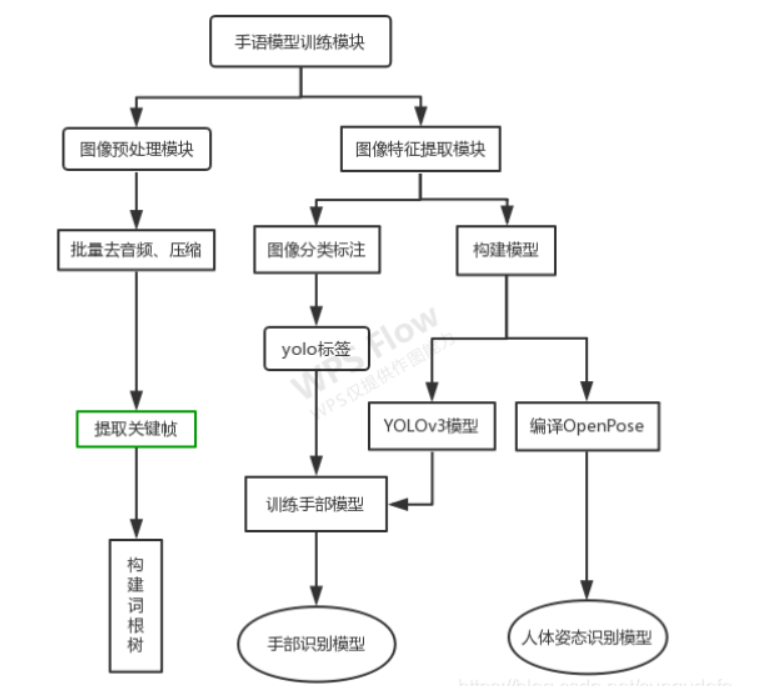

The project is divided into two main parts: YOLOv3 deep model training, and sign language gesture recognition with YOLOv3 and OpenPose.

Model training approach:

Environment: [GPU] win10 (1050Ti)+anaconda3+python3.6+CUDA10.0+tensorflow-gpu2.1.0 (cungudafa's blog, CSDN)

Training model: [Keras+TensorFlow+Yolo3] Master image annotation, training, and recognition in one article (tf2 pitfalls) (cungudafa's blog, CSDN)

Recognition: [Keras+TensorFlow+Yolo3] Teach you how to identify the movie and TV drama model (cungudafa's blog, CSDN)

Model training reference code: https://gitee.com/cungudafa/keras-yolo3

The YOLOv3 recognition code is based on:

https://github.com/AaronJny/tf2-keras-yolo3

4. Human pose numerical feature extraction

Overall recognition process:

The formulas and methods for computing distances and angles were covered in the OpenPose design. Because skeleton proportions differ from person to person, the current optimization is to use distance ratios instead of raw distances (that is, the ratio of the forearm length between key points 3 and 4 to the neck length between key points 0 and 1).

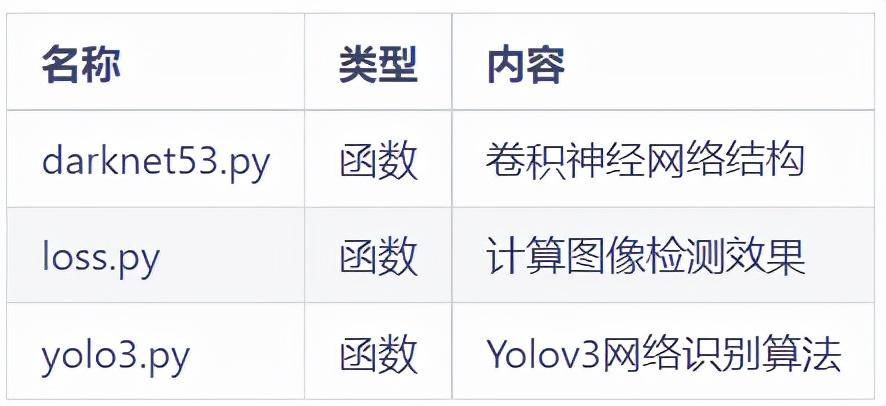

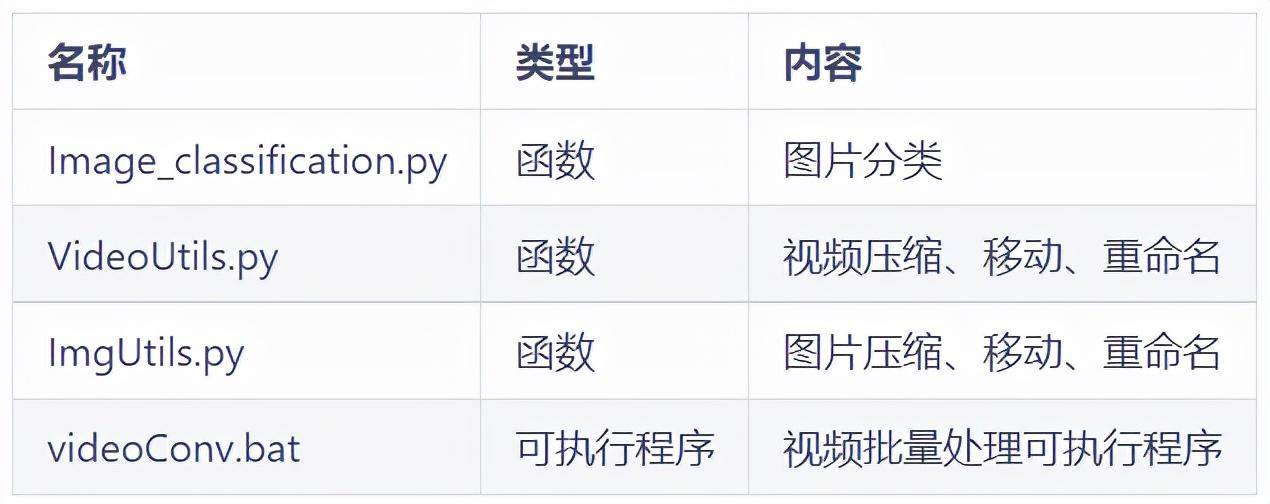
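The distance-ratio feature described in this section can be illustrated with a short sketch. It assumes the key points are available as a mapping from OpenPose key point index to an (x, y) pixel coordinate; the function names and the choice of the elbow angle as a second feature are assumptions made for this example, not the project's actual feature set.

```python
import math


def distance(p, q):
    """Euclidean distance between two (x, y) points."""
    return math.hypot(q[0] - p[0], q[1] - p[1])


def angle_at(vertex, a, b):
    """Angle in degrees at `vertex` between the rays towards a and b."""
    v1 = (a[0] - vertex[0], a[1] - vertex[1])
    v2 = (b[0] - vertex[0], b[1] - vertex[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        return None  # a ray has zero length, so the angle is undefined
    cos = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    # Clamp to [-1, 1] to guard against floating-point rounding.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))


def forearm_neck_ratio(keypoints):
    """Forearm length (key points 3-4) divided by neck length (key points 0-1)."""
    neck = distance(keypoints[0], keypoints[1])
    if neck == 0:
        return None  # neck not detected reliably
    return distance(keypoints[3], keypoints[4]) / neck


# Hypothetical key points: 0 nose, 1 neck, 2 shoulder, 3 elbow, 4 wrist.
keypoints = {0: (0, 0), 1: (0, 10), 2: (-10, 10), 3: (-10, 30), 4: (2, 46)}
ratio = forearm_neck_ratio(keypoints)
elbow_angle = angle_at(keypoints[3], keypoints[2], keypoints[4])
```

Because both lengths scale together when a person stands closer to or further from the camera, the ratio stays the same, which is the point of normalizing by the neck length.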

The project structure of the Keras-based YOLOv3 training is shown in the following table:

keras-yolo3 training program structure:

The logs folder is used to store the trained model, and VOCdevkit is used to store pictures and annotation information.

model_data folder contents:

nets folder contents:

Reference for combining OpenPose and YOLOv3:

“Sign Language Image Recognition System Design: Human Movement Recognition” design and implementation (cungudafa's blog, CSDN)

5. Bayes classification recognition

[Sklearn] Introductory flower dataset experiment: understanding Naive Bayes classifiers (cungudafa's blog, CSDN)

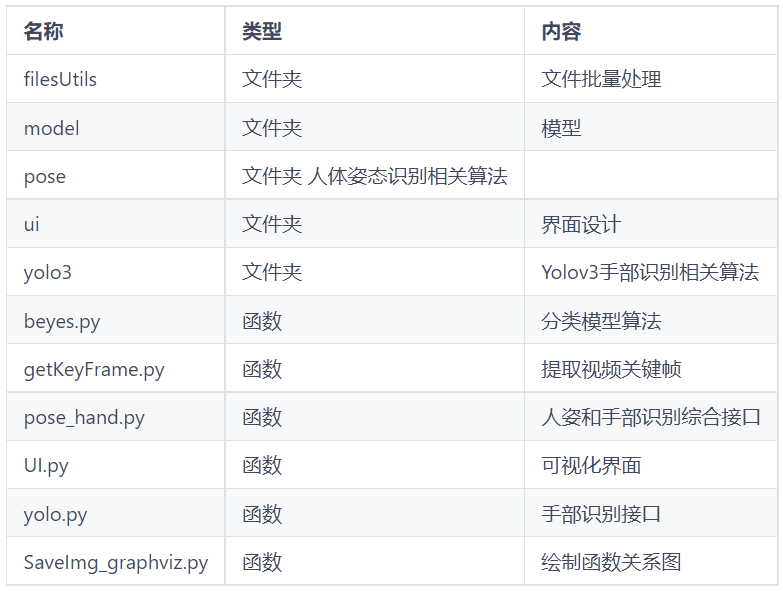
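As a rough illustration of this step, the sketch below trains scikit-learn's `GaussianNB` on a few numerical pose features and predicts a label for a new sample. The feature layout (a distance ratio and an angle), the values, and the labels `sign_A` and `sign_B` are all made up for this example; the project's real features and classes may differ.

```python
import numpy as np
from sklearn.naive_bayes import GaussianNB

# Hypothetical training samples: [forearm/neck ratio, elbow angle in degrees].
X_train = np.array([
    [0.80, 40.0],
    [0.90, 45.0],
    [0.85, 42.0],
    [1.60, 120.0],
    [1.70, 125.0],
    [1.65, 118.0],
])
# Hypothetical labels, one per training sample.
y_train = np.array(["sign_A", "sign_A", "sign_A", "sign_B", "sign_B", "sign_B"])

# Gaussian naive Bayes models each feature per class as a normal distribution.
clf = GaussianNB()
clf.fit(X_train, y_train)


def predict_sign(features):
    """Return the predicted label for a single feature vector."""
    return str(clf.predict(np.array([features]))[0])
```

Calling `predict_sign([0.82, 41.0])` returns the label whose training samples lie closest in this toy data. The predicted label is what would then be shown as text in the interface.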

Code structure of the recognition part:

Video file handling (filesUtils folder):

Models (model folder):

Basic algorithms (pose and yolov3 folders):

Usage:

1. Configure the corresponding environment (docs/requirements.txt)

docs/requirements.txt · cungudafa/hand-keras-yolo3-recognize – Gitee.com

2. Modify the path and run UI_main.py

You can explore the remaining details on your own.

Open source address: Click download