This issue recommends Senta, an open-source sentiment analysis system from Baidu.

Senta is an open-source sentiment analysis system developed by Baidu's technical team. Sentiment analysis aims to automatically identify and extract subjective information from text, such as tendencies, stances, evaluations and opinions. It covers a variety of tasks, including sentence-level sentiment classification, aspect-level (evaluation-object-level) sentiment classification, opinion extraction and emotion classification. Sentiment analysis is an important research direction in artificial intelligence with considerable academic value. It also has important applications in consumer decision-making, public opinion analysis, personalized recommendation and other fields.

To share its leading sentiment analysis technology with researchers, developers and business partners, Baidu has open-sourced in Senta the SKEP (Sentiment Knowledge Enhanced Pre-training) code and pre-trained Chinese and English sentiment models. With only a few lines of code, users can run SKEP-based sentiment pre-training and model prediction.

Usage

1 Environment preparation

- PaddlePaddle installation

This project depends on PaddlePaddle 1.6.3. After installing PaddlePaddle, add the dynamic library paths for CUDA, cuDNN and NCCL2 to the LD_LIBRARY_PATH environment variable; otherwise, library-related errors will be reported during training.
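If these paths are missing, the errors only surface once training starts, so a quick pre-flight check can save time. The sketch below is a hypothetical helper, not part of Senta or PaddlePaddle. It assumes a Linux system where the CUDA runtime, cuDNN and NCCL2 are installed as versioned shared libraries named `libcudart.so.*`, `libcudnn.so.*` and `libnccl.so.*`, and reports which of them cannot be found in any directory listed in `LD_LIBRARY_PATH`.

```python
import os

# Assumed shared-library name prefixes. On Linux the actual files are usually
# versioned (e.g. libcudnn.so.7, libnccl.so.2), depending on installed versions.
DEFAULT_PREFIXES = ("libcudart", "libcudnn", "libnccl")


def provides(filename, prefix):
    """Return True if `filename` looks like a shared library for `prefix`."""
    return filename.startswith(prefix + ".so")


def missing_gpu_libs(env=None, prefixes=DEFAULT_PREFIXES):
    """Return the prefixes not found in any directory listed in LD_LIBRARY_PATH.

    Hypothetical helper for illustration; not part of Senta or PaddlePaddle.
    """
    env = os.environ if env is None else env
    dirs = [d for d in env.get("LD_LIBRARY_PATH", "").split(os.pathsep) if d]
    found = set()
    for directory in dirs:
        try:
            filenames = os.listdir(directory)
        except OSError:
            continue  # skip entries that do not exist or cannot be read
        for filename in filenames:
            for prefix in prefixes:
                if provides(filename, prefix):
                    found.add(prefix)
    # Preserve the order of `prefixes` in the report
    return [prefix for prefix in prefixes if prefix not in found]


if __name__ == "__main__":
    missing = missing_gpu_libs()
    if missing:
        print("Not found on LD_LIBRARY_PATH:", ", ".join(missing))
    else:
        print("CUDA, cuDNN and NCCL2 libraries found on LD_LIBRARY_PATH")
```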

Detailed installation documentation:

https://www.paddlepaddle.org.cn/install/quick?docurl=/documentation/docs/zh/install/pip/linux-pip.html

Installing with pip is recommended:

```shell
python -m pip install paddlepaddle-gpu==1.6.3.post107 -i https://mirror.baidu.com/pypi/simple
```

- Senta project Python package dependencies

Python 3.7 is supported. The project's other Python package dependencies are listed in the requirements.txt file in the root directory and can be installed with the following command:

```shell
python -m pip install -r requirements.txt
```

- Environment variables

Edit the environment variables in ./env.sh, including those for Python, CUDA, cuDNN, NCCL2 and PaddlePaddle.

Description of the PaddlePaddle environment variables:

https://www.paddlepaddle.org.cn/documentation/docs/zh/1.6/flags_cn.html
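The same kind of settings can also be assembled from Python, which is convenient when a training script is launched as a subprocess. This is a minimal sketch, not the contents of Senta's env.sh: the library paths are placeholders, `build_env` and `example_env` are hypothetical names, `CUDA_VISIBLE_DEVICES` is the standard CUDA device-selection variable, and `FLAGS_fraction_of_gpu_memory_to_use` is assumed to be one of the flags described on the PaddlePaddle page above. Check that page for the flags your version accepts.

```python
import os


def build_env(lib_dirs, extra_vars, base=None):
    """Return a copy of `base` (default: the current environment) with
    `lib_dirs` prepended to LD_LIBRARY_PATH and `extra_vars` set as strings."""
    env = dict(os.environ if base is None else base)
    existing = env.get("LD_LIBRARY_PATH", "")
    parts = list(lib_dirs) + ([existing] if existing else [])
    env["LD_LIBRARY_PATH"] = os.pathsep.join(parts)
    # Environment variable values must be strings
    env.update({key: str(value) for key, value in extra_vars.items()})
    return env


def example_env():
    """Hypothetical settings; adjust the paths and values to your machine."""
    return build_env(
        lib_dirs=[
            "/usr/local/cuda/lib64",   # placeholder CUDA path
            "/usr/local/cudnn/lib64",  # placeholder cuDNN path
            "/usr/local/nccl/lib",     # placeholder NCCL2 path
        ],
        extra_vars={
            "CUDA_VISIBLE_DEVICES": "0",  # expose only the first GPU
            # Assumed PaddlePaddle flag: fraction of GPU memory to pre-allocate
            "FLAGS_fraction_of_gpu_memory_to_use": 0.9,
        },
    )
```

The returned dictionary can be passed as the `env=` argument of `subprocess.run` when launching a training script, so the settings apply only to that process rather than to the whole shell session.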

2 Project installation

Senta can be installed either with pip or from source. In both cases, PaddlePaddle must be installed first.
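Because PaddlePaddle must already be present, it can be worth checking for it before installing Senta. A minimal sketch, assuming only that PaddlePaddle is importable as the `paddle` module (as it is in the 1.x releases used here); `is_installed` is a hypothetical helper name:

```python
import importlib.util


def is_installed(module_name):
    """Return True if the top-level module `module_name` can be found by the
    current interpreter, without actually importing it."""
    return importlib.util.find_spec(module_name) is not None


if __name__ == "__main__":
    # PaddlePaddle is imported as `paddle`; install it before installing Senta.
    if is_installed("paddle"):
        print("PaddlePaddle found; Senta can be installed.")
    else:
        print("PaddlePaddle not found: install it first (see section 1).")
```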

- pip installation

```shell
python -m pip install Senta
```

- Source code installation

```shell
git clone https://github.com/baidu/Senta.git
cd Senta
python -m pip install .
```

3 Usage

```python
from senta import Senta

my_senta = Senta()

# List the supported sentiment pre-trained models. SKEP models initialized from
# ERNIE 1.0 large (Chinese), ERNIE 2.0 large (English) and RoBERTa large (English) are released.
print(my_senta.get_support_model())  # ["ernie_1.0_skep_large_ch", "ernie_2.0_skep_large_en", "roberta_skep_large_en"]

# List the supported prediction tasks
print(my_senta.get_support_task())  # ["sentiment_classify", "aspect_sentiment_classify", "extraction"]

# Choose whether to use the GPU
use_cuda = True  # set to True or False

# Chinese sentence-level sentiment classification
my_senta.init_model(model_class="ernie_1.0_skep_large_ch", task="sentiment_classify", use_cuda=use_cuda)
texts = ["中山大学是岭南第一学府"]  # "Sun Yat-sen University is the foremost institution of learning in Lingnan"
result = my_senta.predict(texts)
print(result)

# Chinese aspect-level sentiment classification
my_senta.init_model(model_class="ernie_1.0_skep_large_ch", task="aspect_sentiment_classify", use_cuda=use_cuda)
texts = ["百度是一家高科技公司"]  # "Baidu is a high-tech company"
aspects = ["百度"]  # "Baidu"
result = my_senta.predict(texts, aspects)
print(result)

# Chinese opinion extraction
my_senta.init_model(model_class="ernie_1.0_skep_large_ch", task="extraction", use_cuda=use_cuda)
texts = ["唐 家 三 少 ， 本 名 张 威 。"]  # "Tang Jia San Shao, real name Zhang Wei."
result = my_senta.predict(texts)
print(result)

# English sentence-level sentiment classification (SKEP-ERNIE 2.0 model)
my_senta.init_model(model_class="ernie_2.0_skep_large_en", task="sentiment_classify", use_cuda=use_cuda)
texts = ["a sometimes tedious film ."]
result = my_senta.predict(texts)
print(result)

# English aspect-level sentiment classification (SKEP-ERNIE 2.0 model)
my_senta.init_model(model_class="ernie_2.0_skep_large_en", task="aspect_sentiment_classify", use_cuda=use_cuda)
texts = ["I love the operating system and the preloaded software."]
aspects = ["operating system"]
result = my_senta.predict(texts, aspects)
print(result)

# English opinion extraction (SKEP-ERNIE 2.0 model)
my_senta.init_model(model_class="ernie_2.0_skep_large_en", task="extraction", use_cuda=use_cuda)
texts = ["The JCC would be very pleased to welcome your organization as a corporate sponsor ."]
result = my_senta.predict(texts)
print(result)

# English sentence-level sentiment classification (SKEP-RoBERTa model)
my_senta.init_model(model_class="roberta_skep_large_en", task="sentiment_classify", use_cuda=use_cuda)
texts = ["a sometimes tedious film ."]
result = my_senta.predict(texts)
print(result)

# English aspect-level sentiment classification (SKEP-RoBERTa model)
my_senta.init_model(model_class="roberta_skep_large_en", task="aspect_sentiment_classify", use_cuda=use_cuda)
texts = ["I love the operating system and the preloaded software."]
aspects = ["operating system"]
result = my_senta.predict(texts, aspects)
print(result)

# English opinion extraction (SKEP-RoBERTa model)
my_senta.init_model(model_class="roberta_skep_large_en", task="extraction", use_cuda=use_cuda)
texts = ["The JCC would be very pleased to welcome your organization as a corporate sponsor ."]
result = my_senta.predict(texts)
print(result)
```

Results

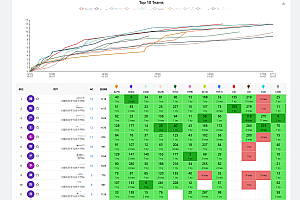

SKEP uses sentiment knowledge to enhance the pre-trained model and outperforms the previous state of the art (SOTA) across all 14 typical Chinese and English sentiment analysis tasks, with an average improvement of about 2% over the previous SOTA. The detailed results are shown in the table.

Interested readers can find more details in the Senta repository.