This issue recommends a .NET utility class library built by modifying and wrapping the C++ code of PaddleOCR.

Project Description

This project is a .NET utility class library built by modifying and wrapping the C++ code of PaddleOCR. It includes text recognition, text detection, and a table recognition function based on statistical analysis of the text detection results. It also optimizes the recognition of small images, which was previously inaccurate, to improve recognition accuracy.

It contains an ultra-lightweight Chinese OCR whose models total only 8.6M. A single model supports recognition of mixed Chinese, English, and digits, vertical text, and long text. Multiple kinds of text detection are supported.

The PaddleOCR.dll file in this project is a C++ dynamic library modified from the C++ code of the open-source project PaddleOCR and compiled for x64 against opencv.

Description of all call parameters

#region General parameters

/// <summary>
/// Whether to use the GPU; off by default.
/// </summary>
public byte use_gpu { get; set; } = 0;

/// <summary>
/// GPU id; only takes effect when the GPU is used.
/// </summary>
public int gpu_id { get; set; } = 0;

/// <summary>
/// GPU memory to request; only takes effect when the GPU is used.
/// </summary>
public int gpu_mem { get; set; } = 4000;

/// <summary>
/// Number of threads to use; default 2.
/// </summary>
public int numThread { get; set; } = 2;

/// <summary>
/// Whether to enable mkldnn acceleration; enabled by default.
/// </summary>
public byte Enable_mkldnn { get; set; } = 1;

#endregion

#region Detection model parameters

/// <summary>
/// Padding (white border); default 50. Not used for now.
/// </summary>
public int Padding { get; set; } = 50;

/// <summary>
/// When the input image's dimensions exceed 960, the image is scaled proportionally so that its longest side is 960.
/// </summary>
public int MaxSideLen { get; set; } = 960;

/// <summary>
/// Score threshold for filtering boxes in DB post-processing. If boxes are missed during detection, lower it as appropriate.
/// </summary>
public float BoxScoreThresh { get; set; } = 0.5f;

/// <summary>
/// Used to filter the binarized image predicted by DB. Values between 0 and 0.3 have no significant effect on the results.
/// </summary>
public float BoxThresh { get; set; } = 0.3f;

/// <summary>
/// Controls how tightly the text box fits the text; the smaller the value, the closer the box is to the text.
/// </summary>
public float UnClipRatio { get; set; } = 1.6f;

/// <summary>
/// DoAngle; enabled (1) by default.
/// </summary>
public byte DoAngle { get; set; } = 1;

/// <summary>
/// MostAngle; enabled (1) by default.
/// </summary>
public byte MostAngle { get; set; } = 1;

/// <summary>
/// Whether to use a polygon box to compute the bbox score. 0 (false) means a rectangular box is used. The rectangular box is faster to compute; the polygon box is more accurate for curved text regions.
/// </summary>
public byte use_polygon_score { get; set; } = 0;

/// <summary>
/// Whether to visualize the result. If set to 1, the prediction result is saved as ocr_vis.png in the current folder.
/// </summary>
public byte visualize { get; set; } = 0;

#endregion

#region Direction classifier parameters

/// <summary>
/// Whether to enable the direction classifier; off by default.
/// </summary>
public byte use_angle_cls { get; set; } = 0;

/// <summary>
/// Score threshold of the direction classifier.
/// </summary>
public float cls_thresh { get; set; } = 0.9f;

#endregion

Server-side C++ prediction

Prepare the environment

- Linux environment, docker is recommended.

- Windows environment, currently supports compilation based on Visual Studio 2019 Community.

Compile the opencv library

- First, download the opencv source package to be compiled under Linux from the opencv official website. Taking opencv 3.4.7 as an example, the download commands are as follows.

cd deploy/cpp_infer
wget https://paddleocr.bj.bcebos.com/libs/opencv/opencv-3.4.7.tar.gz
tar -xf opencv-3.4.7.tar.gz

Finally, you will see the opencv-3.4.7/ folder in the current directory.

- Compile opencv, set the opencv source path (root_path) and installation path (install_path). Enter the opencv source path, and compile in the following way.

root_path="your_opencv_root_path"
install_path=${root_path}/opencv3
build_dir=${root_path}/build

rm -rf ${build_dir}
mkdir ${build_dir}
cd ${build_dir}

cmake .. \
    -DCMAKE_INSTALL_PREFIX=${install_path} \
    -DCMAKE_BUILD_TYPE=Release \
    -DBUILD_SHARED_LIBS=OFF \
    -DWITH_IPP=OFF \
    -DBUILD_IPP_IW=OFF \
    -DWITH_LAPACK=OFF \
    -DWITH_EIGEN=OFF \
    -DCMAKE_INSTALL_LIBDIR=lib64 \
    -DWITH_ZLIB=ON \
    -DBUILD_ZLIB=ON \
    -DWITH_JPEG=ON \
    -DBUILD_JPEG=ON \
    -DWITH_PNG=ON \
    -DBUILD_PNG=ON \
    -DWITH_TIFF=ON \
    -DBUILD_TIFF=ON

make -j
make install

You can also edit the contents of tools/build_opencv.sh and then compile directly by running the following command.

sh tools/build_opencv.sh

Here, root_path is the path of the downloaded opencv source code and install_path is the opencv installation path. After make install completes, the opencv header files and library files are generated in this folder and are used for the subsequent OCR code compilation.
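Once make install has finished, a quick sanity check of the installation path can catch a failed or partial build before the OCR code is compiled against it. The following is a minimal sketch and not part of the PaddleOCR tooling: check_opencv_install is a hypothetical helper name, and the list of subdirectories is simply the layout this document shows for opencv3/.

```shell
# Hypothetical helper (not part of PaddleOCR): verifies that an opencv
# install path contains the subdirectories listed in this document.
check_opencv_install() {
  install_path="$1"
  missing=0
  for sub in bin include lib lib64 share; do
    if [ ! -d "${install_path}/${sub}" ]; then
      echo "missing: ${install_path}/${sub}" >&2
      missing=1
    fi
  done
  # Non-zero exit status if any expected subdirectory is absent.
  return "$missing"
}
```

It could be called as check_opencv_install "${install_path}" right after make install; a non-zero exit status means at least one expected subdirectory is absent.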

The final file structure in the installation path is as follows.

opencv3/
|-- bin
|-- include
|-- lib
|-- lib64
|-- share

Download or compile the Paddle prediction library

Download and install directly:

https://paddle-inference.readthedocs.io/en/latest/user_guides/download_lib.html

- After downloading, extract it with the following command; a subfolder paddle_inference/ is then generated in the current folder.

tar -xf paddle_inference.tgz

Compile the prediction library from source

- To get the latest prediction library features, clone the latest code from the Paddle GitHub repository and compile the prediction library from source.

git clone https://github.com/PaddlePaddle/Paddle.git
cd Paddle
git checkout release/2.2

- Inside the Paddle directory, compile as follows.

rm -rf build
mkdir build
cd build

cmake .. \
    -DWITH_CONTRIB=OFF \
    -DWITH_MKL=ON \
    -DWITH_MKLDNN=ON \
    -DWITH_TESTING=OFF \
    -DCMAKE_BUILD_TYPE=Release \
    -DWITH_INFERENCE_API_TEST=OFF \
    -DON_INFER=ON \
    -DWITH_PYTHON=ON

make -j
make inference_lib_dist

- After compiling, the following files and folders are generated under the build/paddle_inference_install_dir/ directory.

build/paddle_inference_install_dir/
|-- CMakeCache.txt
|-- paddle
|-- third_party
|-- version.txt

Here, paddle is the Paddle library required for C++ prediction, and version.txt contains the version information of the current prediction library.
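Before pointing the OCR build at a prediction library, it can be useful to confirm that the directory has the expected contents and to see which version it reports. The sketch below assumes the layout listed above (a paddle folder, a third_party folder, and a version.txt file); show_paddle_inference is a hypothetical helper name, not a script shipped with Paddle.

```shell
# Hypothetical helper: checks the prediction library layout described in
# this document and prints the contents of version.txt.
show_paddle_inference() {
  lib_dir="$1"
  for sub in paddle third_party; do
    [ -d "${lib_dir}/${sub}" ] || {
      echo "missing directory: ${lib_dir}/${sub}" >&2
      return 1
    }
  done
  [ -f "${lib_dir}/version.txt" ] || {
    echo "missing file: ${lib_dir}/version.txt" >&2
    return 1
  }
  cat "${lib_dir}/version.txt"
}
```

For a library compiled from source, the call would be show_paddle_inference build/paddle_inference_install_dir, run from the Paddle directory.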

Start

Inference model

inference/
|-- det_db
|   |--inference.pdiparams
|   |--inference.pdmodel
|-- rec_rcnn
|   |--inference.pdiparams
|   |--inference.pdmodel

Compile the PaddleOCR C++ prediction demo

- The compilation command is as follows. The paths of the Paddle C++ prediction library, opencv, and other dependent libraries must be replaced with the actual paths on your machine.

sh tools/build.sh

- Specifically, modify the environment paths in tools/build.sh as follows:

OPENCV_DIR=your_opencv_dir
LIB_DIR=your_paddle_inference_dir
CUDA_LIB_DIR=your_cuda_lib_dir
CUDNN_LIB_DIR=/your_cudnn_lib_dir

OPENCV_DIR is the path where opencv was compiled and installed. LIB_DIR is the path of the Paddle prediction library, either downloaded (the paddle_inference folder) or compiled from source (the build/paddle_inference_install_dir folder). CUDA_LIB_DIR is the path of the cuda library files, which is /usr/local/cuda/lib64 in docker. CUDNN_LIB_DIR is the path of the cudnn library files, which is /usr/lib/x86_64-linux-gnu/ in docker. Note: all of the above must be absolute paths; do not use relative paths.
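Because relative paths are an easy mistake here, a small guard can reject them before the build starts. This is a sketch under assumptions: require_abs_dirs is a hypothetical helper, and where it is called from (for example, after the four assignments in tools/build.sh) is left to the reader.

```shell
# Hypothetical helper: fails unless every argument is an absolute path
# to an existing directory.
require_abs_dirs() {
  for dir in "$@"; do
    case "$dir" in
      /*) ;;  # starts with a slash, so it is absolute
      *) echo "not an absolute path: $dir" >&2; return 1 ;;
    esac
    [ -d "$dir" ] || { echo "no such directory: $dir" >&2; return 1; }
  done
}
```

A possible call is require_abs_dirs "$OPENCV_DIR" "$LIB_DIR" "$CUDA_LIB_DIR" "$CUDNN_LIB_DIR", stopping the build if it returns a non-zero status.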

- After compiling, an executable file named ppocr is generated in the build folder.

Run demo

Usage:

./build/ppocr <mode> [--param1] [--param2] [...]

Call detection only:

./build/ppocr det \
    --det_model_dir=inference/ch_ppocr_mobile_v2.0_det_infer \
    --image_dir=../../doc/imgs/12.jpg

Call recognition only:

./build/ppocr rec \
    --rec_model_dir=inference/ch_ppocr_mobile_v2.0_rec_infer \
    --image_dir=../../doc/imgs_words/ch/

Call detection and recognition in series:

# Without the direction classifier
./build/ppocr system \
    --det_model_dir=inference/ch_ppocr_mobile_v2.0_det_infer \
    --rec_model_dir=inference/ch_ppocr_mobile_v2.0_rec_infer \
    --image_dir=../../doc/imgs/12.jpg

# With the direction classifier
./build/ppocr system \
    --det_model_dir=inference/ch_ppocr_mobile_v2.0_det_infer \
    --use_angle_cls=true \
    --cls_model_dir=inference/ch_ppocr_mobile_v2.0_cls_infer \
    --rec_model_dir=inference/ch_ppocr_mobile_v2.0_rec_infer \
    --image_dir=../../doc/imgs/12.jpg

The final detection result is displayed on the screen as follows:

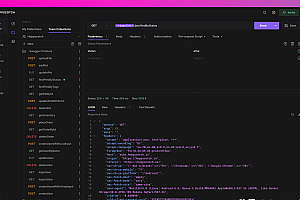
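The three modes differ only in which model directories they need, so the command lines above can be generated from a mode name. The wrapper below is a sketch, not part of the demo: ppocr_cmd is a hypothetical function, and it only prints a command using the flags and model directory names already shown in this document.

```shell
# Hypothetical wrapper: prints the ppocr command line for one of the modes
# shown in this document (det, rec, system). It does not run ppocr itself.
ppocr_cmd() {
  mode="$1"
  image_dir="$2"
  use_cls="${3:-false}"   # pass "true" to add the direction classifier
  models="inference"
  det="--det_model_dir=${models}/ch_ppocr_mobile_v2.0_det_infer"
  rec="--rec_model_dir=${models}/ch_ppocr_mobile_v2.0_rec_infer"
  cls="--use_angle_cls=true --cls_model_dir=${models}/ch_ppocr_mobile_v2.0_cls_infer"
  case "$mode" in
    det) echo "./build/ppocr det ${det} --image_dir=${image_dir}" ;;
    rec) echo "./build/ppocr rec ${rec} --image_dir=${image_dir}" ;;
    system)
      if [ "$use_cls" = "true" ]; then
        echo "./build/ppocr system ${det} ${cls} ${rec} --image_dir=${image_dir}"
      else
        echo "./build/ppocr system ${det} ${rec} --image_dir=${image_dir}"
      fi ;;
    *) echo "unknown mode: ${mode}" >&2; return 1 ;;
  esac
}
```

For example, ppocr_cmd system ../../doc/imgs/12.jpg true prints the direction-classifier variant on a single line, which can be inspected before it is executed.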

.NET example

// Let the user pick an image file.
OpenFileDialog ofd = new OpenFileDialog();
ofd.Filter = "*.*|*.bmp;*.jpg;*.jpeg;*.tiff;*.png";
if (ofd.ShowDialog() != DialogResult.OK) return;

// Load the selected file into a Bitmap.
var imagebyte = File.ReadAllBytes(ofd.FileName);
Bitmap bitmap = new Bitmap(new MemoryStream(imagebyte));

OCRModelConfig config = null;
OCRParameter oCRParameter = new OCRParameter();
// oCRParameter.use_gpu = 1; // Only takes effect with the GPU version of the prediction library.

// Run detection and recognition; the engine is disposed when the block exits.
OCRResult ocrResult = new OCRResult();
using (PaddleOCREngine engine = new PaddleOCREngine(config, oCRParameter))
{
    ocrResult = engine.DetectText(bitmap);
}

if (ocrResult != null)
{
    MessageBox.Show(ocrResult.Text, "Recognition result");
}
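When tuning OCRParameter for an example like the one above, it can help to estimate the image size the detector will actually work on. The sketch below applies the MaxSideLen rule from the parameter list using integer arithmetic. It assumes the rule applies whenever the longer side exceeds the limit, and it does not model any further rounding the library may perform; scaled_size is a hypothetical name used only for illustration.

```shell
# Sketch of the MaxSideLen rule: if the longer side exceeds the limit,
# scale both sides by the same factor so the longer side equals the limit.
# Integer division rounds down; library-specific rounding is not modelled.
scaled_size() {
  width="$1"
  height="$2"
  limit="${3:-960}"   # default matches MaxSideLen = 960
  if [ "$width" -ge "$height" ]; then long="$width"; else long="$height"; fi
  if [ "$long" -le "$limit" ]; then
    # Small enough already: the size is left unchanged.
    echo "${width} ${height}"
  else
    echo "$(( width * limit / long )) $(( height * limit / long ))"
  fi
}
```

For a 1920 x 1080 input, scaled_size 1920 1080 prints 960 540, while an 800 x 600 input is returned unchanged.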