This issue recommends StreamX, a one-stop big data framework that makes Flink and Spark development easier.

StreamX is a one-stop big data framework that makes Apache Spark and Apache Flink development easier. It standardizes project configuration, encourages functional programming, defines best practices for programming, and provides a series of out-of-the-box connectors, standardizing the whole process of configuration, development, testing, deployment, monitoring, and operations. Developers only need to focus on core business logic, which greatly lowers the learning curve and the barrier to development.

Main features

- A range of out-of-the-box connectors

- Project compilation (Maven builds)

- Startup in Application mode and YARN per-job mode

- Quick daily operations (starting and stopping jobs, triggering savepoints, restoring from a savepoint)

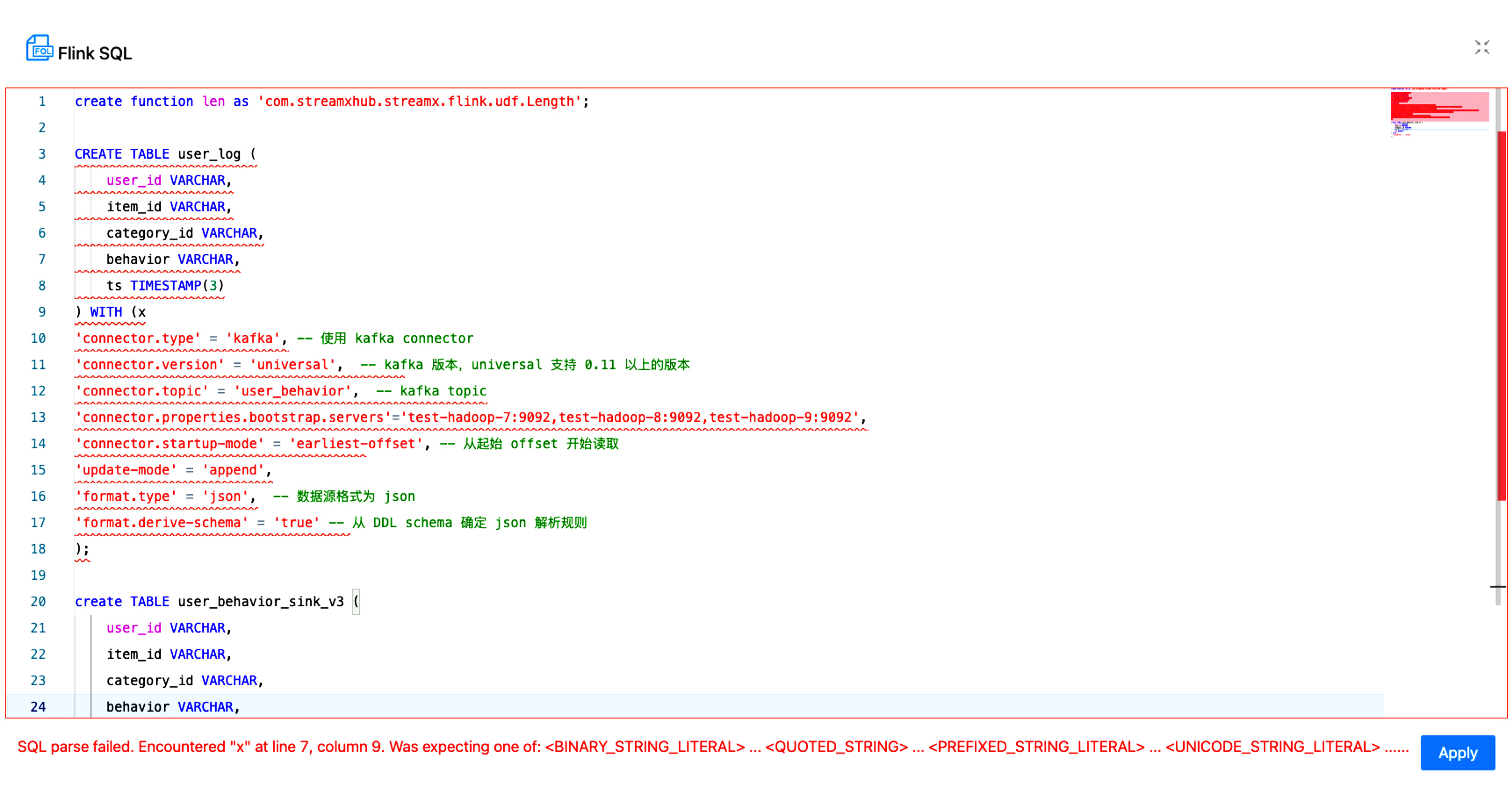

- Notebook support (online job development)

- Job backup and rollback (including configuration rollback)

- Two sets of APIs: Scala and Java

Functional architecture

StreamX consists of three parts: streamx-core, streamx-pump, and streamx-console.

streamx-core:

streamx-core is a development-time framework. It provides a series of out-of-the-box connectors, adds extension methods to DataStream, and integrates the DataStream and Flink SQL APIs.

streamx-pump:

streamx-pump is a data extraction component, similar to FlinkX, built on the connectors provided by streamx-core.

streamx-console:

streamx-console is a combined real-time data platform and low-code platform for managing Flink jobs. It brings project compilation, publishing, parameter configuration, startup, savepoints, flame graphs, Flink SQL, monitoring, and many other functions together in one place, greatly simplifying the day-to-day operation and maintenance of Flink jobs.

Installation and deployment

1 Environment preparation

streamx-console ships as an out-of-the-box installation package, but the environment must meet a few requirements before installation, as described below.

At present, StreamX deploys Flink jobs and supports both the Flink on YARN and Flink on Kubernetes modes.

- Hadoop

To use Flink on YARN, the machine where StreamX is deployed must have Hadoop installed and the related environment variables configured. If your Hadoop environment was installed with CDH, the variables can be configured as follows:

```shell
export HADOOP_HOME=/opt/cloudera/parcels/CDH/lib/hadoop # Hadoop installation directory
export HADOOP_CONF_DIR=/etc/hadoop/conf
export HIVE_HOME=$HADOOP_HOME/../hive
export HBASE_HOME=$HADOOP_HOME/../hbase
export HADOOP_HDFS_HOME=$HADOOP_HOME/../hadoop-hdfs
export HADOOP_MAPRED_HOME=$HADOOP_HOME/../hadoop-mapreduce
export HADOOP_YARN_HOME=$HADOOP_HOME/../hadoop-yarn
```

- Kubernetes

To use Flink on Kubernetes, you need to deploy a Kubernetes cluster or use an existing one. For details, see the Flink K8s integration documentation:

http://www.streamxhub.com/docs/flink-k8s/k8s-dev
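For the Flink on YARN setup, checking that the Hadoop variables are really exported before the first deployment can save a confusing failure later. The following is a minimal sketch, not a StreamX script: check_hadoop_env is a hypothetical helper, and it only checks the core Hadoop variables from the CDH example, on the assumption that HIVE_HOME and HBASE_HOME matter only for jobs that use Hive or HBase.

```shell
#!/bin/sh
# Hypothetical helper (not shipped with StreamX): verify that the core Hadoop
# variables are set and point to existing directories.
# Assumption: HIVE_HOME and HBASE_HOME are optional, so they are not checked.
check_hadoop_env() {
  local status=0 var dir
  for var in HADOOP_HOME HADOOP_CONF_DIR HADOOP_HDFS_HOME HADOOP_MAPRED_HOME HADOOP_YARN_HOME; do
    # POSIX-compatible indirect lookup: dir receives the value of the variable named by $var
    eval "dir=\${$var:-}"
    if [ -z "$dir" ]; then
      echo "missing: $var is not set" >&2
      status=1
    elif [ ! -d "$dir" ]; then
      echo "invalid: $var=$dir is not a directory" >&2
      status=1
    fi
  done
  return "$status"
}
```

Source the file in the shell that will start StreamX and run check_hadoop_env. It reports each missing or invalid variable on stderr and returns a non-zero status if any check fails.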

2 Compile and install

You can either compile and install manually or download a prebuilt installation package directly. To compile and install manually:

```shell
git clone https://github.com/streamxhub/streamx.git
cd streamx
mvn clean install -DskipTests -Denv=prod
```

If everything goes smoothly, you will see the build success message:

```
[INFO] ------------------------------------------------------------------------
[INFO] Reactor Summary for Streamx 1.0.0:
[INFO]
[INFO] Streamx ............................................ SUCCESS [ 1.882 s]
[INFO] Streamx : Common ................................... SUCCESS [ 15.700 s]
[INFO] Streamx : Flink Parent ............................. SUCCESS [ 0.032 s]
[INFO] Streamx : Flink Common ............................. SUCCESS [ 8.243 s]
[INFO] Streamx : Flink Core ............................... SUCCESS [ 17.332 s]
[INFO] Streamx : Flink Test ............................... SUCCESS [ 42.742 s]
[INFO] Streamx : Spark Parent ............................. SUCCESS [ 0.018 s]
[INFO] Streamx : Spark Core ............................... SUCCESS [ 12.028 s]
[INFO] Streamx : Spark Test ............................... SUCCESS [ 5.828 s]
[INFO] Streamx : Spark Cli ................................ SUCCESS [ 0.016 s]
[INFO] ------------------------------------------------------------------------
[INFO] BUILD SUCCESS
```

After the build completes, the final installation package is located at streamx/streamx-console/streamx-console-service/target/streamx-console-service-1.0.0-bin.tar.gz. After unpacking, the installation directory looks like this:

```
streamx-console-service-1.0.0
├── bin
│   ├── flame-graph
│   │   └── *.py                           // flame graph scripts (internal use, can be ignored)
│   ├── startup.sh                         // startup script
│   ├── setclasspath.sh                    // Java environment variable script (internal use, can be ignored)
│   ├── shutdown.sh                        // shutdown script
│   └── yaml.sh                            // parses YAML parameters (internal use, can be ignored)
├── conf
│   ├── application.yaml                   // project configuration file (do not rename)
│   ├── application-prod.yml               // project configuration file to edit for deployment (do not rename)
│   ├── flink-application.template         // Flink configuration template (internal use, can be ignored)
│   ├── logback-spring.xml                 // logback configuration
│   └── ...
├── lib
│   └── *.jar                              // project JARs
├── plugins
│   ├── streamx-jvm-profiler-1.0.0.jar     // jvm-profiler, used for flame graphs (internal use, can be ignored)
│   └── streamx-flink-sqlclient-1.0.0.jar  // Flink SQL submission (internal use, can be ignored)
├── logs                                   // program log directory
└── temp                                   // temporary directory used internally, do not delete
```

3 Modify database configuration

With installation and unpacking complete, the next step is to prepare the database:

- Create a new database named streamx in a MySQL instance that the deployment machine can connect to
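The database can be created with the MySQL command-line client. This is a minimal sketch, assuming the mysql client is installed on a machine that can reach the server. The helper names are hypothetical, and the utf8mb4 character set is an assumption chosen to fit the UTF-8 encoding in the JDBC URL, not a documented StreamX requirement.

```shell
#!/bin/sh
# Hypothetical helpers (not shipped with StreamX).
# Assumption: utf8mb4 is an acceptable default character set for your MySQL server.
streamx_create_db_sql() {
  echo "CREATE DATABASE IF NOT EXISTS streamx DEFAULT CHARACTER SET utf8mb4;"
}

# Usage: create_streamx_db HOST PORT USER
# -p without a value makes the client prompt for the password,
# so it does not end up in the shell history.
create_streamx_db() {
  local host="$1" port="$2" user="$3"
  mysql -h "$host" -P "$port" -u "$user" -p -e "$(streamx_create_db_sql)"
}
```

For example, create_streamx_db db01 3306 root prompts for the password and creates the database only if it does not already exist, so rerunning it is harmless.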

Then write the connection information into the configuration: open conf/application-prod.yml, find the datasource section, locate the MySQL configuration, and change it to match your database, as follows:

```yaml
datasource:
  dynamic:
    # Whether to log SQL statements; disable in production, as it may degrade performance
    p6spy: false
    hikari:
      connection-timeout: 30000
      max-lifetime: 1800000
      max-pool-size: 15
      min-idle: 5
      connection-test-query: select 1
      pool-name: HikariCP-DS-POOL
    # Default data source
    primary: primary
    datasource:
      # Data source 1, named primary
      primary:
        username: $user
        password: $password
        driver-class-name: com.mysql.cj.jdbc.Driver
        url: jdbc:mysql://$host:$port/streamx?useUnicode=true&characterEncoding=UTF-8&useJDBCCompliantTimezoneShift=true&useLegacyDatetimeCode=false&serverTimezone=GMT%2B8
```

Tip: There is no need to initialize any data manually during installation. Once the database connection is configured, table creation and data initialization are performed automatically.
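If you would rather fill in the $user, $password, $host and $port placeholders from the command line than by hand, a sed-based sketch follows. It assumes conf/application-prod.yml still contains those placeholders as literal strings; fill_datasource_placeholders is a hypothetical helper, not a StreamX script.

```shell
#!/bin/sh
# Hypothetical helper (not shipped with StreamX).
# Assumption: the file still contains the literal placeholders $user, $password, $host, $port.

# Escape characters that are special in a sed replacement (\ and &),
# plus "|", which is used as the s-command delimiter below.
_sed_escape() {
  printf '%s' "$1" | sed -e 's/[\\&|]/\\&/g'
}

# Usage: fill_datasource_placeholders FILE USER PASSWORD HOST PORT
fill_datasource_placeholders() {
  local file="$1" tmp rc
  tmp="$(mktemp)" || return 1
  sed -e "s|\\\$user|$(_sed_escape "$2")|g" \
      -e "s|\\\$password|$(_sed_escape "$3")|g" \
      -e "s|\\\$host|$(_sed_escape "$4")|g" \
      -e "s|\\\$port|$(_sed_escape "$5")|g" \
      "$file" > "$tmp" && cat "$tmp" > "$file"  # overwrite in place, keeping file permissions
  rc=$?
  rm -f "$tmp"
  return "$rc"
}
```

For example: fill_datasource_placeholders conf/application-prod.yml streamx 'secret' db01 3306. Passing the password as an argument may leave it in your shell history; editing the file by hand avoids that.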

4 Start the project

Go to the bin directory and run startup.sh to start the project. The default port is 10000:

```shell
cd streamx-console-service-1.0.0/bin
bash startup.sh
```

Logs are written to streamx-console-service-1.0.0/logs/streamx.out.
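Startup can take a moment, so a small polling helper can confirm that the console answers before you open a browser. This is a minimal sketch, assuming curl is available; wait_for_console is a hypothetical name and the retry defaults are arbitrary.

```shell
#!/bin/sh
# Hypothetical helper (not shipped with StreamX). Assumes curl is installed.
# Usage: wait_for_console HOST [PORT] [ATTEMPTS] [INTERVAL_SECONDS]
wait_for_console() {
  local host="$1" port="${2:-10000}" attempts="${3:-30}" interval="${4:-2}" i=1
  while [ "$i" -le "$attempts" ]; do
    # Any HTTP response (even a redirect to the login page) means the server is up.
    if curl -s -o /dev/null --max-time 2 "http://${host}:${port}/"; then
      echo "StreamX console is reachable at http://${host}:${port}"
      return 0
    fi
    if [ "$i" -lt "$attempts" ]; then
      sleep "$interval"
    fi
    i=$((i + 1))
  done
  echo "console not reachable after ${attempts} attempts; check logs/streamx.out" >&2
  return 1
}
```

For example, run wait_for_console localhost right after bash startup.sh; if it gives up, the log file above is the first place to look.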

Open a browser and go to http://$host:10000 to log in (default username/password: admin/streamx).

5 Modify system configuration

After logging in, the first thing to do is update the system configuration, under the menu StreamX > Setting.

The main configuration items are divided into the following categories:

Flink Home:

Configure the global Flink Home here. This is the only place where the system's Flink environment is specified, and it applies to all jobs.

Maven Home:

Specifies Maven Home. This is not yet supported and is planned for the next version.

StreamX Env:

StreamX Webapp address: the web URL at which StreamX Console is accessed. It is mainly used by the flame graph feature: running jobs send HTTP requests to the URL configured here so that the data can be collected and displayed.

StreamX Console Workspace: Configure the system’s workspace for storing project source code, compiled projects, etc.

Email:

Alert email settings: configure the sender's email account used for alerts. Refer to your email provider's documentation for the specific values.
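Before entering the Flink Home and workspace paths on the settings page, they can be checked on the deployment machine. This is a minimal sketch, assuming a standard Flink binary distribution layout (bin/flink and lib/flink-dist*.jar); check_streamx_settings is a hypothetical helper, and StreamX itself may validate these paths differently.

```shell
#!/bin/sh
# Hypothetical helper (not shipped with StreamX).
# Assumption: FLINK_HOME follows the standard Flink binary distribution layout.
# Usage: check_streamx_settings FLINK_HOME WORKSPACE_DIR
check_streamx_settings() {
  local flink_home="$1" workspace="$2" status=0 has_dist=no jar
  if [ ! -x "$flink_home/bin/flink" ]; then
    echo "Flink Home: $flink_home/bin/flink is missing or not executable" >&2
    status=1
  fi
  # An unmatched glob stays literal, so -e is true only for a real file.
  for jar in "$flink_home"/lib/flink-dist*.jar; do
    if [ -e "$jar" ]; then has_dist=yes; break; fi
  done
  if [ "$has_dist" != yes ]; then
    echo "Flink Home: no flink-dist*.jar under $flink_home/lib" >&2
    status=1
  fi
  if [ ! -d "$workspace" ] || [ ! -w "$workspace" ]; then
    echo "Workspace: $workspace is not a writable directory" >&2
    status=1
  fi
  return "$status"
}
```

For example, check_streamx_settings /opt/flink /data/streamx_workspace returns zero only when both paths look usable, which makes it easy to catch a typo before saving the settings.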

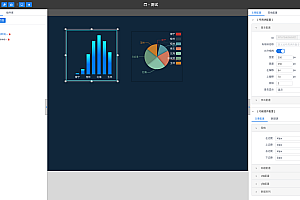

System screenshot

You can explore the remaining features on your own.