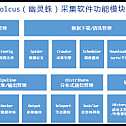

Framework Features

Provides users who have some Go or JS programming background with a full-featured, heavyweight crawler tool: they only need to focus on writing custom rules.

Supports three run modes: standalone, server, and client.

Offers three user interfaces, GUI (Windows), Web, and command line (Cmd), selected with a startup parameter.

Supports run-state control, such as pause, resume, and stop.
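The pause/resume/stop control can be pictured with a small controller that each worker consults before sending a request. This is a minimal sketch built on `sync.Cond`, assuming workers call a check-in method between requests; the `Controller` type and its methods are hypothetical and are not Pholcus's actual API.

```go
package main

import (
	"fmt"
	"sync"
)

// Controller is a hypothetical run-state controller (not Pholcus's API).
type Controller struct {
	mu      sync.Mutex
	cond    *sync.Cond
	paused  bool
	stopped bool
}

func NewController() *Controller {
	c := &Controller{}
	c.cond = sync.NewCond(&c.mu)
	return c
}

// Pause makes subsequent Wait calls block until Resume or Stop is called.
func (c *Controller) Pause() {
	c.mu.Lock()
	c.paused = true
	c.mu.Unlock()
}

// Resume clears the pause flag and wakes every worker blocked in Wait.
func (c *Controller) Resume() {
	c.mu.Lock()
	c.paused = false
	c.mu.Unlock()
	c.cond.Broadcast()
}

// Stop ends the task permanently and wakes every blocked worker.
func (c *Controller) Stop() {
	c.mu.Lock()
	c.stopped = true
	c.mu.Unlock()
	c.cond.Broadcast()
}

// Wait is called by a worker before each request. It blocks while the task
// is paused and reports whether the worker may continue (false once stopped).
func (c *Controller) Wait() bool {
	c.mu.Lock()
	defer c.mu.Unlock()
	for c.paused && !c.stopped {
		c.cond.Wait()
	}
	return !c.stopped
}

func main() {
	c := NewController()
	c.Pause()
	done := make(chan bool)
	go func() { done <- c.Wait() }() // blocks while the task is paused
	c.Resume()
	fmt.Println(<-done) // true: resumed and not stopped
	c.Stop()
	fmt.Println(c.Wait()) // false: the task was stopped
}
```

Using a condition variable lets any number of paused workers sleep without polling and wake together on a single `Broadcast`.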

Supports limiting the number of items collected.

Supports limiting the number of concurrent goroutines.

Supports running multiple collection tasks concurrently.

Supports a proxy IP list with a configurable rotation frequency.

Supports random pauses during collection to mimic human browsing behavior.

Provides custom configuration input interfaces tailored to each rule's requirements.

Supports output to MySQL, MongoDB, Kafka, CSV, and Excel, as well as downloading original files.

Supports batch output with a configurable batch size.

Supports both static Go and dynamic JS collection rules, horizontal and vertical crawling modes, and ships with a large number of demos.

Persists a record of successful requests for automatic deduplication.

Serializes failed requests and can deserialize them later so they are automatically reloaded and retried.
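The failed-request handling amounts to a serialize/deserialize round trip: save enough of each failed request to rebuild it, then requeue it later. This sketch assumes JSON as the storage format and a deliberately small `FailedRequest` record; both are hypothetical and are not Pholcus's internal request type.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/http"
)

// FailedRequest is a hypothetical, minimal record of a request that failed.
type FailedRequest struct {
	Method  string            `json:"method"`
	URL     string            `json:"url"`
	Header  map[string]string `json:"header,omitempty"`
	Retries int               `json:"retries"`
}

// saveFailures serializes failed requests so they survive a restart.
func saveFailures(reqs []FailedRequest) ([]byte, error) {
	return json.Marshal(reqs)
}

// loadFailures deserializes previously saved failures.
func loadFailures(data []byte) ([]FailedRequest, error) {
	var reqs []FailedRequest
	err := json.Unmarshal(data, &reqs)
	return reqs, err
}

// toHTTP rebuilds an *http.Request from a stored record so it can be retried.
func toHTTP(f FailedRequest) (*http.Request, error) {
	req, err := http.NewRequest(f.Method, f.URL, nil)
	if err != nil {
		return nil, err
	}
	for k, v := range f.Header {
		req.Header.Set(k, v)
	}
	return req, nil
}

func main() {
	failed := []FailedRequest{{Method: "GET", URL: "https://example.com/list?page=3", Retries: 1}}
	data, err := saveFailures(failed)
	if err != nil {
		panic(err)
	}
	fmt.Println(string(data)) // what would be written to disk

	restored, err := loadFailures(data)
	if err != nil {
		panic(err)
	}
	req, err := toHTTP(restored[0])
	if err != nil {
		panic(err)
	}
	fmt.Println("requeued:", req.Method, req.URL)
}
```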

Uses the surfer high-concurrency downloader, which supports the GET/POST/HEAD methods and the HTTP/HTTPS protocols. It offers two modes: a fixed User-Agent with cookies saved automatically, or a large pool of random User-Agents with cookies disabled. Together these closely mimic browser behavior and make features such as simulated login possible.
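The two downloader modes can be approximated with the standard library: a client with a cookie jar plus one fixed User-Agent, or a client without a jar plus a randomly chosen User-Agent per request. This is a sketch using only `net/http` and `net/http/cookiejar`; it does not use surfer's API, and the `newClient`/`pickUA` helpers and the User-Agent strings are illustrative assumptions.

```go
package main

import (
	"fmt"
	"math/rand"
	"net/http"
	"net/http/cookiejar"
)

// Illustrative User-Agent pool; a real pool would be much larger.
var userAgents = []string{
	"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0 Safari/537.36",
	"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/17.0 Safari/605.1.15",
	"Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
}

// newClient builds a client for one of the two modes:
// fixedUA=true: cookies are stored in a jar (useful for simulated login);
// fixedUA=false: no jar, so cookies are neither stored nor sent.
func newClient(fixedUA bool) (*http.Client, error) {
	c := &http.Client{}
	if fixedUA {
		jar, err := cookiejar.New(nil)
		if err != nil {
			return nil, err
		}
		c.Jar = jar
	}
	return c, nil
}

// pickUA returns the User-Agent for the next request in the chosen mode.
func pickUA(fixedUA bool) string {
	if fixedUA {
		return userAgents[0]
	}
	return userAgents[rand.Intn(len(userAgents))]
}

func main() {
	for _, fixed := range []bool{true, false} {
		client, err := newClient(fixed)
		if err != nil {
			panic(err)
		}
		req, err := http.NewRequest("GET", "https://example.com/", nil)
		if err != nil {
			panic(err)
		}
		req.Header.Set("User-Agent", pickUA(fixed))
		fmt.Printf("fixed=%v cookies=%v UA=%q\n", fixed, client.Jar != nil, req.Header.Get("User-Agent"))
		// client.Do(req) would send the request; omitted so the sketch runs offline.
	}
}
```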

The server/client mode is built on the Teleport high-concurrency socket API framework, communicates over full-duplex connections, and uses JSON as its internal data transfer format.

That covers the main features of Pholcus (ghost spider). The official website offers detailed installation and usage tutorials, and anyone who wants to learn about web crawlers may find it worth a look.