In this issue, we recommend a facial expression recognition system: FacialExpressionRecognition.

A CICC research report pointed out that face recognition is a core technology for VR social applications in the metaverse.

FacialExpressionRecognition is the source code of a facial expression recognition system. The project uses a classical convolutional neural network whose design mainly draws on several CVPR 2018 papers and Google’s Going Deeper, and it identifies facial expressions such as happy, sad, angry, and neutral. Traditional face feature extraction methods such as Gabor and LBP were tried first, and the deep model performs markedly better than them. The model is currently evaluated on three expression recognition datasets: FER2013, JAFFE, and CK+.

Environment deployment

The project is based on Python 3 and Keras 2 (TensorFlow backend). Install the dependencies as follows (a conda virtual environment is recommended):

git clone https://github.com/luanshiyinyang/FacialExpressionRecognition.git

cd FacialExpressionRecognition

conda create -n FER python=3.6 -y

conda activate FER

conda install cudatoolkit=10.1 -y

conda install cudnn=7.6.5 -y

pip install -r requirements.txt

If you are a Linux user, you can configure the environment in one step by running env.sh from the root directory with the command bash env.sh.

Data preparation

The dataset and pre-trained model have been uploaded to Baidu Netdisk:

https://pan.baidu.com/share/init?surl=LFu52XTMBdsTSQjMIPYWnw, the extraction password is 2pmd.

After downloading, move model.zip to the models folder in the root directory and extract it to get a *.h5 model parameter file. Move data.zip to the dataset folder in the root directory and extract it to get several compressed archives, one per dataset, then decompress those to get the image datasets. The archive with the rar suffix is the original JAFFE dataset; using the version processed by the project author is recommended instead.

Project Description

Traditional methods

Data preprocessing: image noise reduction, face detection (Haar cascade classifier from OpenCV)

Feature Engineering: Face Feature Extraction (LBP, Gabor)

Classifier: SVM
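To make the feature-engineering step more concrete, here is a minimal sketch of the basic 3x3 LBP operator in plain Python. It is an illustration, not the project's code: the function names `lbp_codes` and `lbp_histogram` and the list-of-rows image format are assumptions, and a real pipeline would likely use an optimized library implementation before passing the histogram to the SVM.

```python
def lbp_codes(image):
    """Compute basic 3x3 LBP codes for the interior pixels of a grayscale image.

    `image` is a list of rows of integer intensities (an assumed input format).
    """
    # Neighbour offsets in clockwise order, starting at the top-left pixel.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    height, width = len(image), len(image[0])
    codes = []
    for r in range(1, height - 1):
        row = []
        for c in range(1, width - 1):
            center = image[r][c]
            code = 0
            for bit, (dr, dc) in enumerate(offsets):
                # A neighbour at least as bright as the centre sets its bit.
                if image[r + dr][c + dc] >= center:
                    code |= 1 << bit
            row.append(code)
        codes.append(row)
    return codes


def lbp_histogram(codes):
    """Normalized 256-bin histogram of LBP codes, usable as a feature vector."""
    hist = [0] * 256
    total = 0
    for row in codes:
        for code in row:
            hist[code] += 1
            total += 1
    return [count / total for count in hist] if total else hist
```

In this sketch each interior pixel is replaced by an 8-bit code describing which neighbours are at least as bright as it, and the histogram of those codes summarizes local texture independently of overall brightness.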

Deep learning approach

Face detection: Haar cascade classifier or MTCNN (MTCNN works better)

Convolutional neural network: used for feature extraction + classification

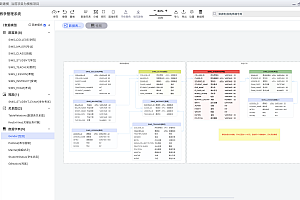

Network design

The model is a classical convolutional neural network. Its construction mainly draws on several CVPR 2018 papers and Google’s Going Deeper to design the following network structure. A (1,1) convolutional layer is added after the input layer to increase the nonlinear representation. The model is shallow and has few parameters, most of which are concentrated in the fully connected layers.
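The remark that most parameters sit in the fully connected layers can be checked with simple arithmetic. The sketch below counts parameters for a made-up stack of layers; every layer size here is a hypothetical value chosen for illustration and is not the project's actual architecture.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, out_channels):
    """Convolution weights plus one bias per output channel."""
    return kernel_h * kernel_w * in_channels * out_channels + out_channels


def dense_params(in_features, out_features):
    """Dense weights plus one bias per output unit."""
    return in_features * out_features + out_features


# Hypothetical layer sizes, chosen only to illustrate the arithmetic.
layers = {
    "conv 1x1, 1 -> 32": conv2d_params(1, 1, 1, 32),
    "conv 5x5, 32 -> 32": conv2d_params(5, 5, 32, 32),
    "conv 3x3, 32 -> 64": conv2d_params(3, 3, 32, 64),
    "dense 6*6*64 -> 1024": dense_params(6 * 6 * 64, 1024),
    "dense 1024 -> 7": dense_params(1024, 7),
}

total = sum(layers.values())
for name, count in layers.items():
    # Print each layer's parameter count and its share of the total.
    print(f"{name:24s} {count:>10,d}  ({count / total:.1%})")
```

With these assumed sizes, the first dense layer alone accounts for well over 90% of all parameters, while the (1,1) convolution after the input adds only 64, which is why such a layer is cheap to include.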

Model training

The model is mainly trained on FER2013, JAFFE, and CK+. JAFFE provides half-body images, so face detection is applied first. On FER2013, both the Public Test and Private Test sets reach an accuracy of about 67% (the dataset was collected by web crawling and has problems such as label errors, watermarks, and cartoon images). With 5-fold cross-validation, JAFFE and CK+ both reach about 99% (these two datasets were collected in a laboratory and are more accurate and standardized).
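Assuming the cross-validation on JAFFE and CK+ is a standard 5-fold scheme, the splitting logic can be sketched as follows. The function name `k_fold_indices` and its parameters are hypothetical and only illustrate the idea; the project may split its data differently.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_indices, val_indices) pairs for k-fold cross-validation."""
    indices = list(range(n_samples))
    # Shuffle once with a fixed seed so the folds are reproducible.
    random.Random(seed).shuffle(indices)
    # Spread the remainder so fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, val
        start += size
```

Each sample is used for validation exactly once and for training in the other folds, and the reported accuracy would then be the average over the folds. This matters for small datasets, where a single train/test split would give a noisy estimate.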

Executing the following command trains the model on the specified dataset (FER2013, JAFFE, or CK+) for the specified number of epochs with the specified batch_size. Training also generates a plot of the training process, and the following figure shows the training curves on the three datasets together.

python src/train.py --dataset fer2013 --epochs 300 --batch_size 32

Model application

Because the convolutional neural network performs better than the traditional methods, it is used to build the recognition system, which provides a GUI and real-time detection from a camera (make sure the lighting on the face is sufficient). At prediction time, each picture is horizontally flipped, rotated by 15 degrees, translated, and so on, and the model produces one probability distribution per variant. These distributions are combined by a weighted sum into the final probability distribution, and the class with the largest probability is used as the label (that is, test-time data augmentation is used).
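The combination step described above can be sketched in a few lines of plain Python. This is only an illustration under assumptions: the function names, the equal default weights, and the three-class example are hypothetical, and in the real system the distributions would come from the model's predictions on the augmented images.

```python
def combine_predictions(distributions, weights=None):
    """Weighted sum of per-view probability distributions, renormalized to sum to 1."""
    if weights is None:
        weights = [1.0] * len(distributions)  # assumed default: equal weights
    if len(weights) != len(distributions):
        raise ValueError("need one weight per distribution")
    combined = [0.0] * len(distributions[0])
    for dist, weight in zip(distributions, weights):
        for i, prob in enumerate(dist):
            combined[i] += weight * prob
    total = sum(combined)
    return [p / total for p in combined]


def predict_label(distributions, labels, weights=None):
    """Return the label with the highest combined probability and the combined distribution."""
    combined = combine_predictions(distributions, weights)
    best = max(range(len(combined)), key=combined.__getitem__)
    return labels[best], combined


# Hypothetical outputs for the original, flipped, and rotated views of one face.
views = [
    [0.2, 0.5, 0.3],
    [0.1, 0.6, 0.3],
    [0.4, 0.3, 0.3],
]
label, combined = predict_label(views, ["angry", "happy", "neutral"])
print(label, combined)
```

Averaging over several views makes the final label less sensitive to a single unlucky crop or head pose, at the cost of one forward pass per variant.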

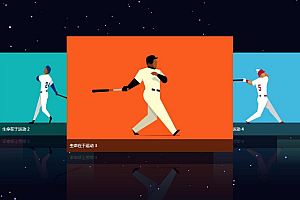

GUI interface

Note: The GUI shows the prediction only for the most likely face, but every detected face is framed and labeled with its prediction on the image, and the annotated image is saved in the output directory.

Execute the following command to open the GUI program, which is built with PyQt. The result on a test image (from the Internet) is shown below.

python src/gui.py

Along with the GUI feedback in the above figure, the program detects and recognizes the face of each person in the picture, and the processed image is shown in the following figure.

Real-time detection

Real-time detection is built on OpenCV and is designed to run prediction on a live camera stream. In response to feedback from some users, command-line parameters were added so that people without a camera can test with a video file instead.

Use the first command below to turn on the camera for real-time detection (press the ESC key to exit). To run detection on a video file, use the second command.

python src/recognition_camera.py

python src/recognition_camera.py --source 1 --video_path <absolute path of the video, or a path relative to the project root>
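For readers curious how such options are typically handled, here is a minimal argparse sketch. It is an assumption about how a script like this might parse its flags, not the project's actual code: the helper names `build_parser` and `resolve_input`, and the convention that a source of 0 means the camera, are hypothetical.

```python
import argparse


def build_parser():
    parser = argparse.ArgumentParser(
        description="Real-time expression recognition (illustrative sketch)")
    # Assumed convention: 0 selects the camera, 1 selects a video file.
    parser.add_argument("--source", type=int, default=0, choices=[0, 1],
                        help="0: camera, 1: video file")
    parser.add_argument("--video_path", type=str, default=None,
                        help="video path, absolute or relative to the project root")
    return parser


def resolve_input(args):
    """Return the value that would be handed to a video capture object."""
    if args.source == 0:
        return 0  # index of the default camera
    if not args.video_path:
        raise ValueError("--video_path is required when --source is 1")
    return args.video_path
```

In this sketch, running with no arguments selects the camera, and passing both flags selects the video file. Failing early when the path is missing avoids a less obvious error later, when the capture fails to open.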

The following image is an animated demonstration of the recognition results on a video.

The project is released under the GPL-3.0 open source license; see the repository for more details.