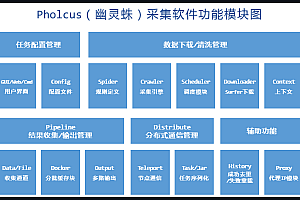

An open source API testing tool developed in Go, supporting HTTP/WebSocket/RPC and other protocols

HttpRunner is an open source API testing tool that supports HTTP(S)/HTTP2/WebSocket/RPC and other network protocols, covering API testing, performance testing, digital experience monitoring (DEM), and other test types. It is simple to use yet powerful, with a rich plug-in mechanism and a high degree of extensibility.

Features

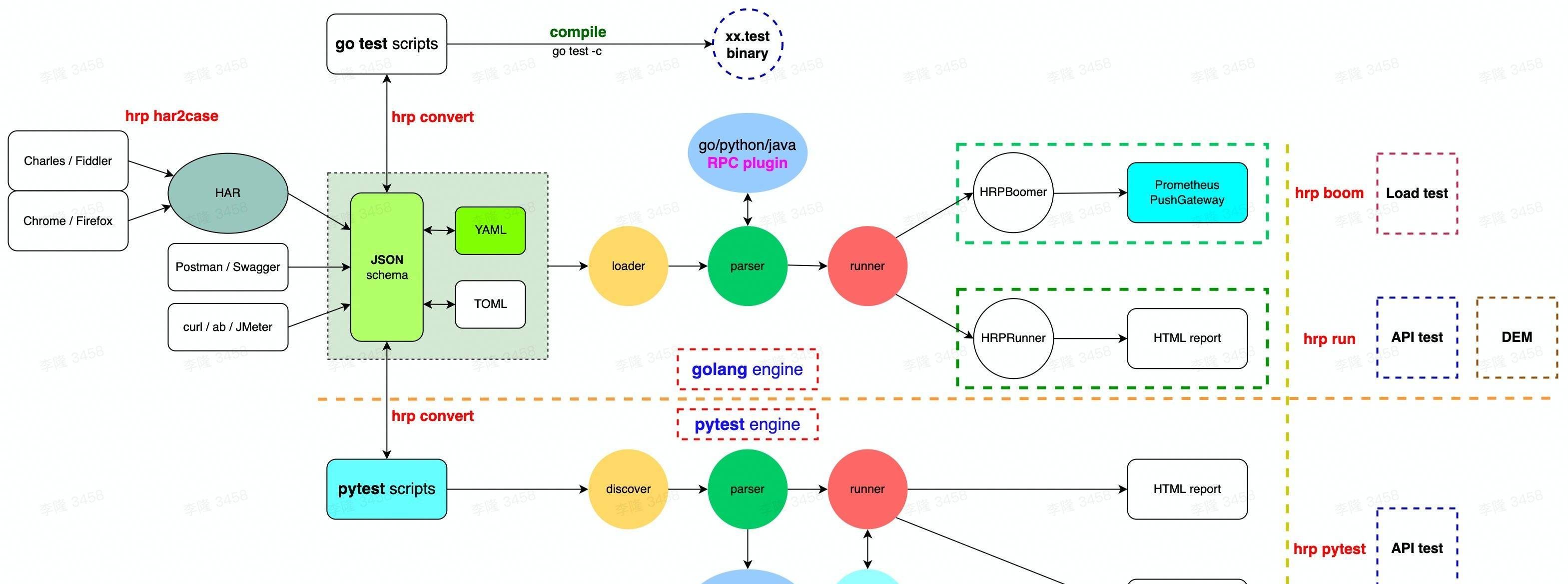

- Network protocols: full support for HTTP(S)/HTTP2/WebSocket, with extensible support for TCP/UDP/RPC and other protocols

- Multiple formats: test cases can be written in YAML/JSON/go test/pytest format, with conversion between formats

- Dual execution engines: both golang and python engines are supported, combining the high performance of Go with the rich pytest ecosystem

- Recording & generation: generate test cases from HAR/Postman/Swagger/curl; method hints for chained calls also help you write test cases quickly

- Complex scenarios: the variables/extract/validate/hooks mechanisms make it easy to build arbitrarily complex test scenarios

- Plug-in mechanism: a rich built-in function library, plus custom functions written in mainstream programming languages (go/python/java) for extra capabilities

- Performance testing: existing test cases can be run as load tests with no extra work; a single machine easily supports 10,000+ VUM, and distributed load generation scales this to massive load

- Network performance collection: on top of scenario-based API testing, network link metrics (DNS resolution, TCP connection, SSL handshake, network transfer, etc.) can also be collected

- One-click deployment: a single binary command line tool installs quickly on macOS, Linux, or Windows with no environment dependencies
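To show what separate per-phase network timings mean, the sketch below times name resolution and the TCP connect as two distinct phases, using only Python's standard library. It is not how HttpRunner collects these metrics, and it leaves out the SSL handshake and transfer phases. `measure_connect` is a hypothetical helper, and the demo runs against a throwaway local listener so that no external network is needed.

```python
import socket
import time
from typing import Dict


def measure_connect(host: str, port: int, timeout: float = 5.0) -> Dict[str, float]:
    """Time name resolution and TCP connection setup separately, in milliseconds."""
    start = time.perf_counter()
    # Name lookup (DNS or hosts file); use the first stream-socket address returned
    family, socktype, proto, _, addr = socket.getaddrinfo(
        host, port, type=socket.SOCK_STREAM)[0]
    resolved = time.perf_counter()
    with socket.socket(family, socktype, proto) as sock:
        sock.settimeout(timeout)
        sock.connect(addr)  # completes the TCP three-way handshake
        connected = time.perf_counter()
    return {
        "dns_ms": (resolved - start) * 1000,
        "tcp_connect_ms": (connected - resolved) * 1000,
    }


# Demo against a local listener bound to an OS-assigned free port
with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
    server.bind(("127.0.0.1", 0))
    server.listen()
    timings = measure_connect("127.0.0.1", server.getsockname()[1])
    print(timings)
```

Splitting the timestamps lets you see, for example, a slow resolver separately from a slow server.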

Installation and deployment

HttpRunner is developed in Golang and ships pre-compiled binaries for mainstream operating systems, so it can be installed with a single command in the system terminal.

```shell
$ bash -c "$(curl -ksSL https://httprunner.com/script/install.sh)"
```

After the command succeeds, you will have the hrp command line tool; run hrp -h to view its help.

```
$ hrp -h

██╗  ██╗████████╗████████╗██████╗ ██████╗ ██╗   ██╗███╗   ██╗███╗   ██╗███████╗██████╗
██║  ██║╚══██╔══╝╚══██╔══╝██╔══██╗██╔══██╗██║   ██║████╗  ██║████╗  ██║██╔════╝██╔══██╗
███████║   ██║      ██║   ██████╔╝██████╔╝██║   ██║██╔██╗ ██║██╔██╗ ██║█████╗  ██████╔╝
██╔══██║   ██║      ██║   ██╔═══╝ ██╔══██╗██║   ██║██║╚██╗██║██║╚██╗██║██╔══╝  ██╔══██╗
██║  ██║   ██║      ██║   ██║     ██║  ██║╚██████╔╝██║ ╚████║██║ ╚████║███████╗██║  ██║
╚═╝  ╚═╝   ╚═╝      ╚═╝   ╚═╝     ╚═╝  ╚═╝ ╚═════╝ ╚═╝  ╚═══╝╚═╝  ╚═══╝╚══════╝╚═╝  ╚═╝

HttpRunner is an open source API testing tool that supports HTTP(S)/HTTP2/WebSocket/RPC
network protocols, covering API testing, performance testing and digital experience
monitoring (DEM) test types. Enjoy!

License: Apache-2.0
Website: https://httprunner.com
Github: https://github.com/httprunner/httprunner
Copyright 2017 debugtalk

Usage:
  hrp [command]

Available Commands:
  boom         run load test with boomer
  completion   generate the autocompletion script for the specified shell
  convert      convert JSON/YAML testcases to pytest/gotest scripts
  har2case     convert HAR to json/yaml testcase files
  help         Help about any command
  pytest       run API test with pytest
  run          run API test with go engine
  startproject create a scaffold project

Flags:
  -h, --help               help for hrp
      --log-json           set log to json format
  -l, --log-level string   set log level (default "INFO")
  -v, --version            version for hrp

Use "hrp [command] --help" for more information about a command.
```

Creating a scaffold project

HttpRunner can create a sample project from a built-in scaffold.

Run the hrp startproject command to initialize a project with a specified name.

```
$ hrp startproject demo
10:13PM INF Set log to color console other than JSON format.
10:13PM ??? Set log level
10:13PM INF create new scaffold project force=false pluginType=py projectName=demo
10:13PM INF create folder path=demo
10:13PM INF create folder path=demo/har
10:13PM INF create file path=demo/har/.keep
10:13PM INF create folder path=demo/testcases
10:13PM INF create folder path=demo/reports
10:13PM INF create file path=demo/reports/.keep
10:13PM INF create file path=demo/.gitignore
10:13PM INF create file path=demo/.env
10:13PM INF create file path=demo/testcases/demo_with_funplugin.json
10:13PM INF create file path=demo/testcases/demo_requests.yml
10:13PM INF create file path=demo/testcases/demo_ref_testcase.yml
10:13PM INF start to create hashicorp python plugin
10:13PM INF create file path=demo/debugtalk.py
10:13PM INF ensure python3 venv packages=["funppy==v0.4.3"] python=/Users/debugtalk/.hrp/venv/bin/python
10:13PM INF python package is ready name=funppy version=0.4.3
10:13PM INF create scaffold success projectName=demo
```

The generated project has the following directory structure. The testcases folder contains several sample test cases.

```
$ tree demo -a
demo
├── .env
├── .gitignore
├── debugtalk.py
├── har
│   └── .keep
├── reports
│   └── .keep
└── testcases
    ├── demo_ref_testcase.yml
    ├── demo_requests.yml
    └── demo_with_funplugin.json

3 directories, 8 files
```

Test case

Let’s take demo_requests.yml as an example to give an initial preview of HttpRunner’s test case structure.

```yaml
config:
    name: "request methods testcase with functions"
    variables:
        foo1: config_bar1
        foo2: config_bar2
        expect_foo1: config_bar1
        expect_foo2: config_bar2
    base_url: "https://postman-echo.com"
    verify: False
    export: ["foo3"]

teststeps:
-
    name: get with params
    variables:
        foo1: bar11
        foo2: bar21
        sum_v: "${sum_two(1, 2)}"
    request:
        method: GET
        url: /get
        params:
            foo1: $foo1
            foo2: $foo2
            sum_v: $sum_v
        headers:
            User-Agent: HttpRunner/${get_httprunner_version()}
    extract:
        foo3: "body.args.foo2"
    validate:
        - eq: ["status_code", 200]
        - eq: ["body.args.foo1", "bar11"]
        - eq: ["body.args.sum_v", "3"]
        - eq: ["body.args.foo2", "bar21"]
-
    name: post raw text
    variables:
        foo1: "bar12"
        foo3: "bar32"
    request:
        method: POST
        url: /post
        headers:
            User-Agent: HttpRunner/${get_httprunner_version()}
            Content-Type: "text/plain"
        data: "This is expected to be sent back as part of response body: $foo1-$foo2-$foo3."
    validate:
        - eq: ["status_code", 200]
        - eq: ["body.data", "This is expected to be sent back as part of response body: bar12-$expect_foo2-bar32."]
-
    name: post form data
    variables:
        foo2: bar23
    request:
        method: POST
        url: /post
        headers:
            User-Agent: HttpRunner/${get_httprunner_version()}
            Content-Type: "application/x-www-form-urlencoded"
        data: "foo1=$foo1&foo2=$foo2&foo3=$foo3"
    validate:
        - eq: ["status_code", 200]
        - eq: ["body.form.foo1", "$expect_foo1"]
        - eq: ["body.form.foo2", "bar23"]
        - eq: ["body.form.foo3", "bar21"]
```

An HttpRunner test case consists of exactly two parts:

- config: the common configuration of the test case, including the test case name, base_url, parameterized data sources, and whether SSL verification is enabled

- teststeps: an ordered set of steps; following Go's interface design philosophy, steps can be extended to any protocol and test type (even UI automation)

In the example above, each step is an HTTP request. As you can see, each step describes only the core elements of the request and the validation of its result, with nothing superfluous.
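The sketch below illustrates the extract and validate semantics of the first step. It assumes that the response is a plain dict holding status_code and a parsed JSON body, and that a path such as body.args.foo2 is a dot-separated lookup into nested dicts. `get_field` and `check_eq` are hypothetical helpers, not HttpRunner's implementation. The fake response mimics postman-echo's reply, which echoes query parameters back as strings; that is why the demo expects "3" rather than 3.

```python
from typing import Any, Dict


def get_field(response: Dict[str, Any], path: str) -> Any:
    """Resolve a dot-separated path such as "body.args.foo2" in nested dicts."""
    value: Any = response
    for key in path.split("."):
        value = value[key]
    return value


def check_eq(response: Dict[str, Any], path: str, expected: Any) -> bool:
    """Hypothetical stand-in for an `eq: [path, expected]` validator."""
    return get_field(response, path) == expected


# Fake response shaped like the echo of the "get with params" step
response = {
    "status_code": 200,
    "body": {"args": {"foo1": "bar11", "foo2": "bar21", "sum_v": "3"}},
}

# extract: {foo3: "body.args.foo2"}
extracted = {"foo3": get_field(response, "body.args.foo2")}

# validate: the four `eq` checks from the first step
validators = [
    ("status_code", 200),
    ("body.args.foo1", "bar11"),
    ("body.args.sum_v", "3"),
    ("body.args.foo2", "bar21"),
]
results = [check_eq(response, path, expected) for path, expected in validators]
print(extracted, all(results))  # {'foo3': 'bar21'} True
```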

Note also that although the test case above is plain YAML text, it supports variable references and function calls:

- Variable reference: by convention, variables are referenced as $var or ${var}, e.g. $foo1 or ${foo1}

- Function call: by convention, plug-in functions are called as ${func(...)}, e.g. ${sum_two(1, 2)}
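The sketch below shows how these two conventions could be expanded with a regular expression. The grammar is an assumption made for illustration: function arguments are limited to integer or string literals, and this is not HttpRunner's own parser. The `functions` dict mirrors the names registered in debugtalk.py, and `render` and `parse_arg` are hypothetical helpers.

```python
import re
from typing import Any, Callable, Dict

# Assumed grammar: ${func(arg, ...)} is a call; ${var} or $var is a variable
PATTERN = re.compile(
    r"\$\{(?P<func>\w+)\((?P<args>[^)]*)\)\}"
    r"|\$\{(?P<braced>\w+)\}"
    r"|\$(?P<bare>\w+)"
)


def parse_arg(raw: str) -> Any:
    """Treat integer literals as ints and anything else as a (quoted) string."""
    raw = raw.strip()
    try:
        return int(raw)
    except ValueError:
        return raw.strip("'\"")


def render(template: str, variables: Dict[str, Any],
           functions: Dict[str, Callable[..., Any]]) -> str:
    """Replace every $var, ${var} and ${func(...)} occurrence in template."""
    def substitute(match: re.Match) -> str:
        if match.group("func"):
            raw_args = match.group("args")
            args = [parse_arg(a) for a in raw_args.split(",")] if raw_args.strip() else []
            return str(functions[match.group("func")](*args))
        name = match.group("braced") or match.group("bare")
        return str(variables[name])

    return PATTERN.sub(substitute, template)


functions = {
    "sum_two": lambda a, b: a + b,
    "get_httprunner_version": lambda: "v4.0.0-alpha",
}
variables = {"foo1": "bar12", "foo2": "config_bar2", "foo3": "bar32"}

print(render("${sum_two(1, 2)}", variables, functions))                        # 3
print(render("HttpRunner/${get_httprunner_version()}", variables, functions))  # HttpRunner/v4.0.0-alpha
print(render("body: $foo1-${foo2}-$foo3.", variables, functions))               # body: bar12-config_bar2-bar32.
```

The function-call alternative is tried first, so `${name(...)}` is never mistaken for a braced variable.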

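Variables are declared in the variables block of a step or of config and are resolved by priority. In the example above, for instance, the step-level foo1: bar11 overrides the config-level foo1: config_bar1.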
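The expected values in demo_requests.yml imply that step variables override both config variables and variables extracted by earlier steps. The sketch below reproduces this with a dict merge. Placing extracted variables above config variables is an assumption, because the demo does not exercise that pair. `step_scope` is a hypothetical helper.

```python
from typing import Any, Dict


def step_scope(config_vars: Dict[str, Any],
               extracted_vars: Dict[str, Any],
               step_vars: Dict[str, Any]) -> Dict[str, Any]:
    """Merge variable scopes; later dicts win on key conflicts.

    Assumed order (lowest to highest priority): config, extracted, step.
    """
    return {**config_vars, **extracted_vars, **step_vars}


config_vars = {"foo1": "config_bar1", "foo2": "config_bar2",
               "expect_foo1": "config_bar1", "expect_foo2": "config_bar2"}
extracted_vars = {"foo3": "bar21"}  # extracted by step 1 from body.args.foo2

# Step 2 ("post raw text") declares foo1 and foo3 itself
step2 = step_scope(config_vars, extracted_vars, {"foo1": "bar12", "foo3": "bar32"})
# Step 3 ("post form data") only declares foo2
step3 = step_scope(config_vars, extracted_vars, {"foo2": "bar23"})

print(step2["foo1"], step2["foo2"], step2["foo3"])  # bar12 config_bar2 bar32
print(step3["foo1"], step3["foo2"], step3["foo3"])  # config_bar1 bar23 bar21
```

Both printed lines match the values the demo's validate sections expect.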

Functions are defined in debugtalk.py in the project root directory. Following the “convention over configuration” design philosophy, nothing needs to be configured in the test case to use them.

```python
import funppy


def get_httprunner_version():
    return "v4.0.0-alpha"


def sum_two_int(a: int, b: int) -> int:
    return a + b


if __name__ == '__main__':
    # Register each function under the name used in test cases, e.g. ${sum_two(1, 2)}
    funppy.register("get_httprunner_version", get_httprunner_version)
    funppy.register("sum_two", sum_two_int)
    # Serve the registered functions to hrp as a plugin
    funppy.serve()
```

In debugtalk.py you can write functions that implement any custom logic; just register them with funppy and call serve().

Running API tests

Once the test cases are ready, run the hrp run command to execute them. To generate an HTML test report, add the --gen-html-report flag.

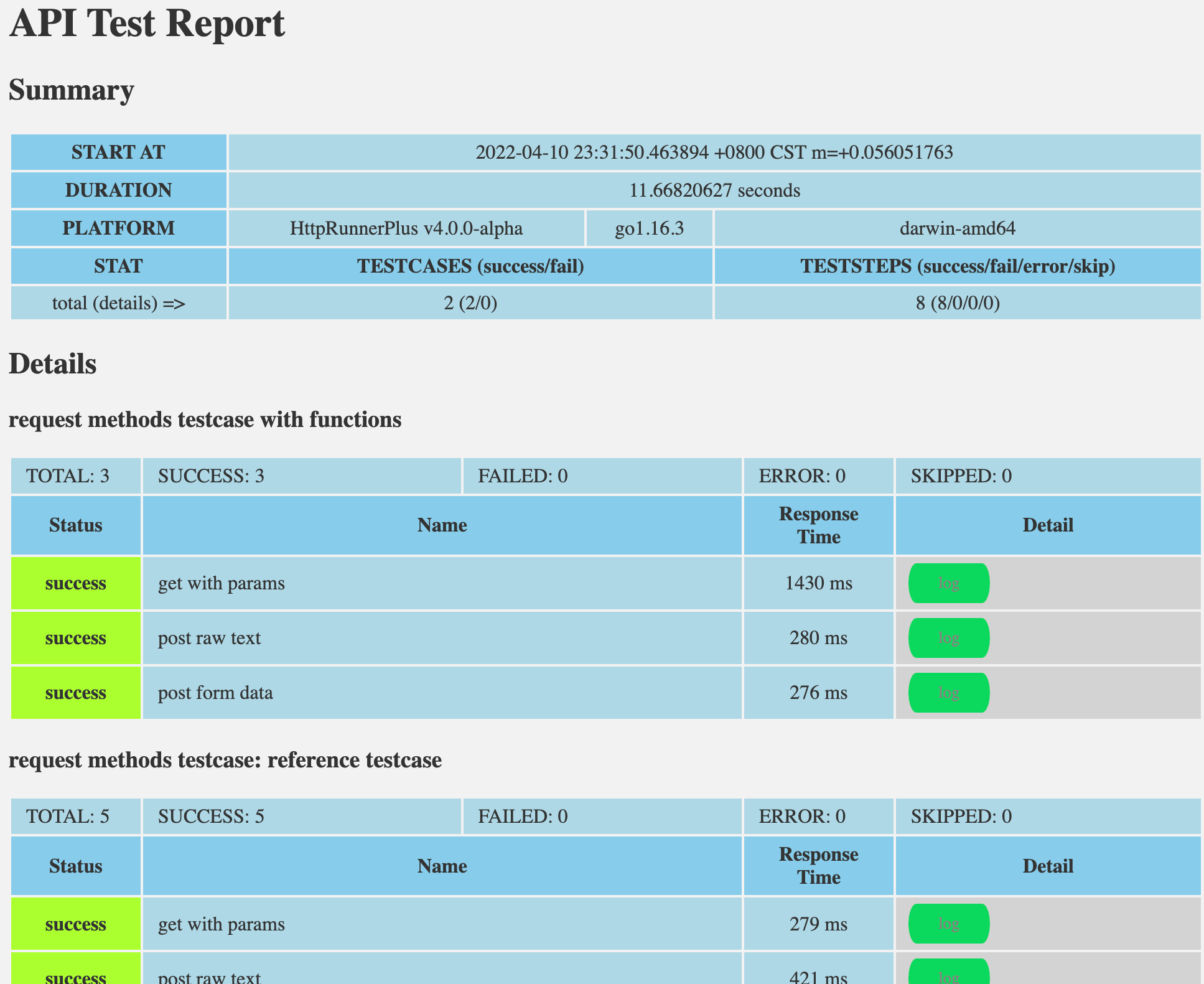

$ hrp run demo/testcases/demo_requests.yml demo/testcases/demo_ref_testcase.yml --gen-html-reportThe HTML report generated by the test looks like this:

Running performance tests

Existing API test cases can be run as performance tests with the hrp boom command, with no extra work. Use the --spawn-count flag to set the number of concurrent users and the --spawn-rate flag to set the ramp-up rate.

```shell
$ hrp boom testcases/demo_requests.yml --spawn-count 100 --spawn-rate 10
```

While the load test is running, summary performance statistics are printed every 3 seconds. When the test is stopped with CTRL+C, a statistical summary of the entire load test is printed.
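A quick way to reason about the two flags is to estimate how long the ramp-up takes. The sketch assumes that --spawn-rate means users started per second, as in the Locust-style load generation that boomer follows. `ramp_up_schedule` is a hypothetical planning helper and not part of hrp. Under this assumption, the command above reaches its full 100 users after about 100 / 10 = 10 seconds.

```python
import math
from typing import List


def ramp_up_schedule(spawn_count: int, spawn_rate: float) -> List[int]:
    """Active users at the end of each second of the ramp-up.

    Assumes spawn_rate users are started per second until spawn_count is reached.
    """
    seconds = math.ceil(spawn_count / spawn_rate)
    return [min(spawn_count, math.floor(spawn_rate * (t + 1))) for t in range(seconds)]


# --spawn-count 100 --spawn-rate 10 from the command above
schedule = ramp_up_schedule(100, 10)
print(f"full load after {len(schedule)} s: {schedule}")
# full load after 10 s: [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]
```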


Open source license: Apache-2.0