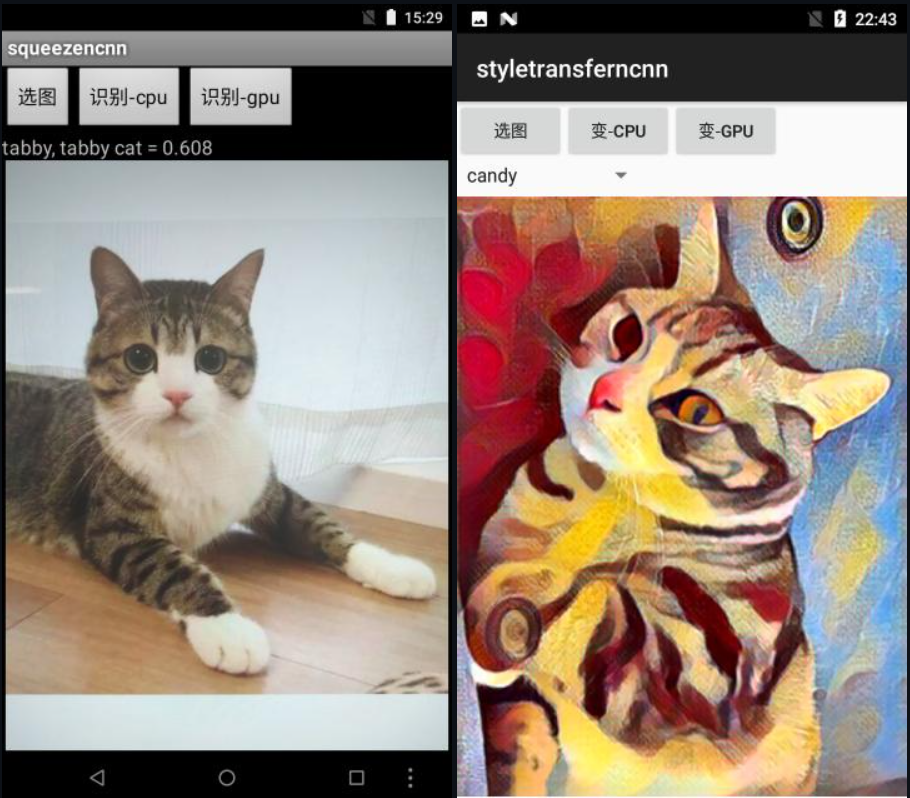

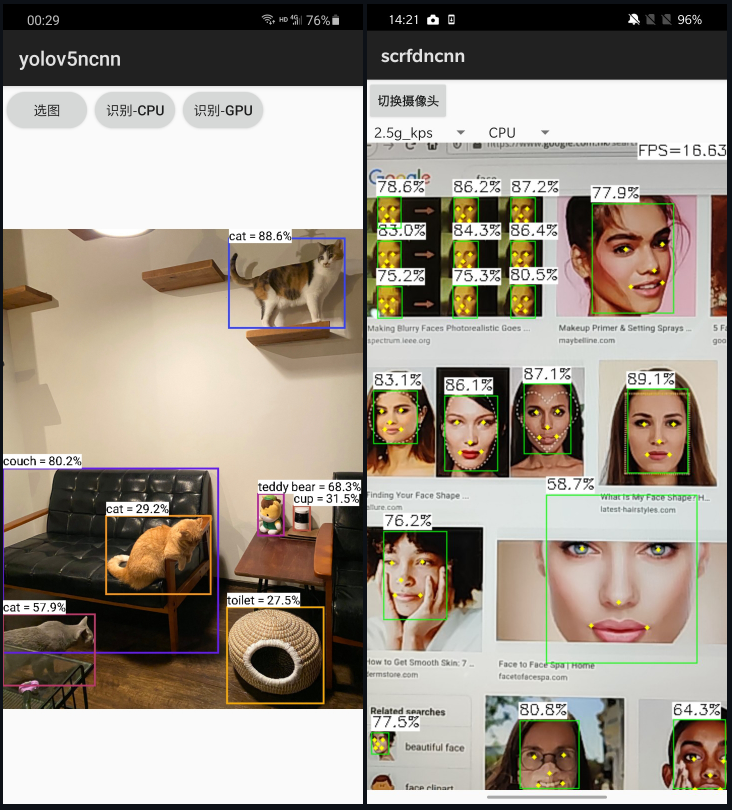

ncnn is a high-performance neural network inference framework optimized for mobile phones. From the start, ncnn was designed with mobile deployment and use in mind: it has no third-party dependencies, is cross-platform, and runs faster on mobile phone CPUs than all known open-source frameworks.

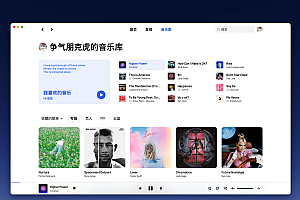

Based on ncnn, developers can easily port deep learning algorithms to mobile phones for efficient execution and build artificial intelligence apps. ncnn is already used in many Tencent applications, such as QQ, Qzone, WeChat, Tiantian Ptu, etc.

ncnn Features Overview:

Supports convolutional neural networks, including multi-input and multi-branch structures, and can compute only a subset of the branches

No third-party library dependencies; does not rely on BLAS, NNPACK, or other computing frameworks

Pure C++ implementation, cross-platform, supports Android, iOS, etc.

Carefully hand-tuned ARM NEON assembly-level optimization, extremely fast computation

Fine-grained memory management and data structure design, very low memory footprint

Supports multi-core parallel computing acceleration and ARM big.LITTLE CPU scheduling optimization

Supports GPU acceleration based on the new low-overhead Vulkan API

The overall library size is less than 700K and can easily be reduced to less than 300K

Extensible model design, supports 8-bit quantization and half-precision floating-point storage, can import caffe/pytorch/mxnet/onnx/darknet/keras/tensorflow (mlir) models

Supports loading network models by direct zero-copy memory reference

Custom layer implementations can be registered to extend the framework
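To make the last feature more concrete, here is a minimal sketch of how a layer registry of this kind can work in general. It is not ncnn's actual API: `Layer`, `LayerRegistry`, `register_layer`, and `Relu6` are hypothetical names chosen for illustration, and the sketch only assumes that layers are created by type name through a factory function.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical base class for illustration; not ncnn's real Layer interface.
struct Layer {
    virtual ~Layer() = default;
    // Transforms the data in place.
    virtual void forward(std::vector<float>& data) const = 0;
};

// A factory function that builds one layer instance.
using LayerCreator = std::function<std::unique_ptr<Layer>()>;

// Maps a layer type name to the factory that creates it.
class LayerRegistry {
public:
    void register_layer(const std::string& type, LayerCreator creator) {
        assert(creator && "creator must be callable");
        creators_[type] = std::move(creator);
    }

    // Returns nullptr when no layer was registered under this type name.
    std::unique_ptr<Layer> create(const std::string& type) const {
        auto it = creators_.find(type);
        if (it == creators_.end()) return nullptr;
        return it->second();
    }

private:
    std::map<std::string, LayerCreator> creators_;
};

// Example custom layer: clamps every value to the range [0, 6].
struct Relu6 : Layer {
    void forward(std::vector<float>& data) const override {
        for (float& v : data) {
            v = v < 0.f ? 0.f : (v > 6.f ? 6.f : v);
        }
    }
};
```

Under these assumptions, a caller would register the new type once, for instance with `registry.register_layer("Relu6", [] { return std::unique_ptr<Layer>(new Relu6()); })`, and a model loader could then instantiate it by name with `registry.create("Relu6")` whenever that type appears in a network description.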

Platforms supported by ncnn:

= Running fast = Speed may not be fast enough = not confirmed / = Not applied

A typical example:

You can explore the rest on your own.