This issue is about big data. Good news for anyone who wants to learn it!

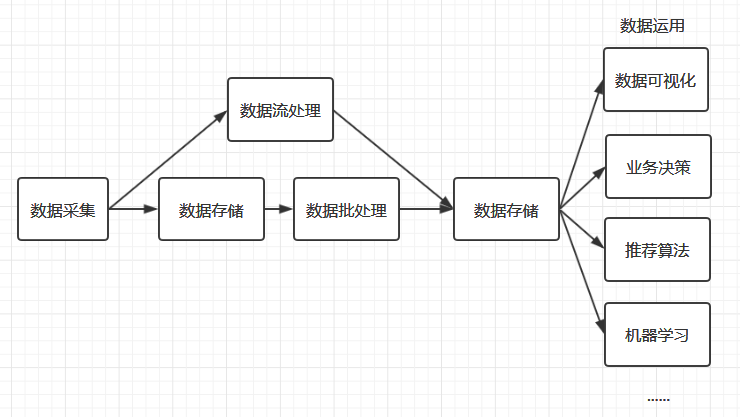

Big data processing workflow

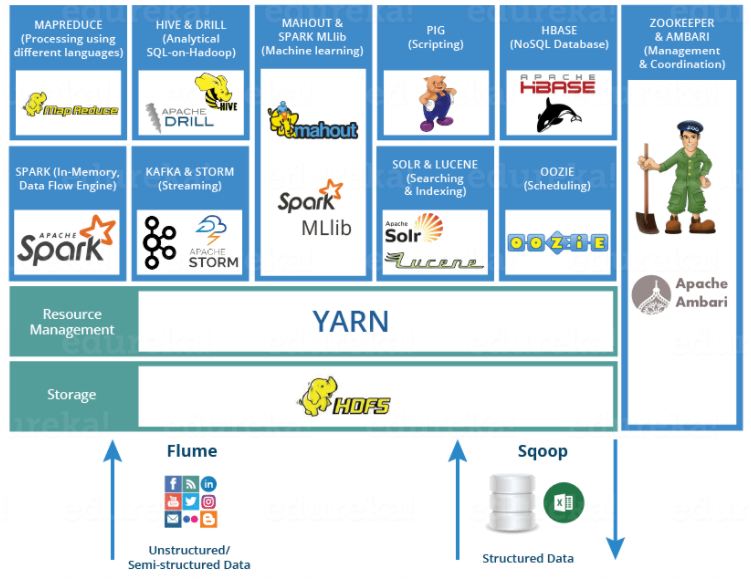

Frameworks to learn

Log collection frameworks: Flume, Logstash, and Filebeat

Distributed file storage system: Hadoop HDFS

Database systems: MongoDB and HBase

Distributed computing frameworks:

- Batch processing framework: Hadoop MapReduce

- Stream processing framework: Storm

- Hybrid processing frameworks: Spark, Flink

Query analysis frameworks: Hive, Spark SQL, Flink SQL, Pig, Phoenix

Cluster resource manager: Hadoop YARN

Distributed coordination service: ZooKeeper

Data migration tool: Sqoop

Task scheduling frameworks: Azkaban, Oozie

Cluster deployment and monitoring: Ambari, Cloudera Manager

Data collection

The first step in big data processing is collecting the data. Medium and large projects today usually adopt a microservice architecture with distributed deployment, so data has to be collected from multiple servers, and the collection process must not interfere with normal business operation. To meet this requirement, a variety of log collection tools have emerged, such as Flume, Logstash, and Filebeat, which can handle complex data collection and aggregation through simple configuration.
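To make the collect-and-aggregate idea concrete, here is a minimal Python sketch. It is not how Flume, Logstash, or Filebeat are implemented; it only imitates the general pattern of reading raw log lines from several servers and merging them into one structured stream. The server names, the log format, and the functions `tail_source` and `collect` are all hypothetical.

```python
import json
from typing import Dict, Iterable, Iterator, List


def tail_source(lines: Iterable[str], host: str) -> Iterator[dict]:
    """Source: turn raw log lines from one server into structured events."""
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        # Assumed log format: "<LEVEL> <message>"
        level, _, message = line.partition(" ")
        yield {"host": host, "level": level, "message": message}


def collect(sources: Dict[str, Iterable[str]]) -> List[str]:
    """Sink: aggregate events from every server into one JSON-lines batch."""
    batch = []
    for host, lines in sources.items():
        for event in tail_source(lines, host):
            batch.append(json.dumps(event, sort_keys=True))
    return batch


# Hypothetical logs from two microservice instances.
logs = {
    "order-service-1": ["INFO order created", "", "ERROR payment timeout"],
    "user-service-1": ["INFO user login"],
}

batch = collect(logs)
for record in batch:
    print(record)
```

In a real deployment the sources would be files or sockets on each server and the sink would be something like HDFS or a message queue; the tools listed above let you declare both in a configuration file instead of writing this code yourself.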

Data storage

Once the data is collected, the next question is how to store it. The most familiar options are traditional relational databases such as MySQL and Oracle, which can store structured data quickly and support random access. However, big data is usually semi-structured (such as log data) or even unstructured (such as video and audio data). To store massive amounts of semi-structured and unstructured data, distributed file systems such as Hadoop HDFS, KFS, and GFS were developed. They can all store structured, semi-structured, and unstructured data, and they scale out by adding machines.

Distributed file systems solve the problem of storing massive amounts of data, but a good storage system has to handle access as well as storage. For example, you may want random access to the data, which is a strength of traditional relational databases but not of distributed file systems. Is there a storage scheme that combines the advantages of both? HBase and MongoDB emerged to meet this need.
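The difference between file-style access and random access can be sketched in a few lines of Python. This is a toy illustration only: `scan_lookup` stands in for reading a file sequentially, and the hypothetical `SortedStore` class loosely mirrors the idea that HBase keeps rows sorted by row key so a single row can be located without a full scan. Neither reflects the real storage engines.

```python
import bisect

# Hypothetical records, stored in arrival order as in an append-only file.
records = [("user-003", "c"), ("user-001", "a"), ("user-002", "b")]


def scan_lookup(rows, key):
    """File-style access: read rows one by one until the key is found.

    Returns the value and the number of rows that had to be read.
    """
    for steps, (row_key, value) in enumerate(rows, start=1):
        if row_key == key:
            return value, steps
    return None, len(rows)


class SortedStore:
    """Key-value style access: rows are kept sorted by key for fast lookup."""

    def __init__(self, rows):
        self._rows = sorted(rows)
        self._keys = [row_key for row_key, _ in self._rows]

    def get(self, key):
        # Binary search over the sorted keys instead of a full scan.
        i = bisect.bisect_left(self._keys, key)
        if i < len(self._keys) and self._keys[i] == key:
            return self._rows[i][1]
        return None


store = SortedStore(records)
print(scan_lookup(records, "user-002"))
print(store.get("user-002"))
```

With three rows the difference is invisible, but the scan grows linearly with the data while the sorted lookup grows logarithmically, which is why random access matters at big data scale.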

Data analysis

The most important part of big data processing is data analysis, which is usually divided into two types: batch processing and stream processing.

- Batch processing: processing a large volume of offline data, accumulated over a period of time, in one pass. The corresponding frameworks include Hadoop MapReduce, Spark, and Flink.

- Stream processing: processing data in motion, that is, processing each piece of data as it is received. The corresponding frameworks include Storm, Spark Streaming, and Flink Streaming.
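The two styles can be contrasted with a small Python sketch. It uses plain standard-library code rather than any of the frameworks named above, and the event list and function names are made up for illustration. The batch function sees the whole data set at once; the stream function updates its result every time a new event arrives.

```python
from collections import Counter
from typing import Iterable, Iterator

# Hypothetical user-behavior events.
events = ["click", "view", "click", "buy", "view", "click"]


def batch_count(all_events: Iterable[str]) -> Counter:
    """Batch: the complete data set is available before processing starts."""
    return Counter(all_events)


def stream_count(incoming: Iterable[str]) -> Iterator[Counter]:
    """Stream: emit an updated result as each event arrives."""
    running = Counter()
    for event in incoming:
        running[event] += 1
        yield running.copy()  # snapshot of the counts so far


batch_result = batch_count(events)
snapshots = list(stream_count(iter(events)))

print(batch_result)
print(snapshots[0])   # result after the first event only
print(snapshots[-1])  # result after the last event
```

Once every event has been seen, the final stream snapshot equals the batch result; the difference is that the stream version had a usable answer after every single event.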

Batch processing and stream processing each have their own use cases. Batch processing suits workloads that are not time-sensitive or that run on limited hardware resources. Stream processing suits workloads that are time-sensitive and need timely results. As server hardware gets cheaper and timeliness requirements rise, stream processing is becoming more common, for example in stock price forecasting and e-commerce operations analysis.

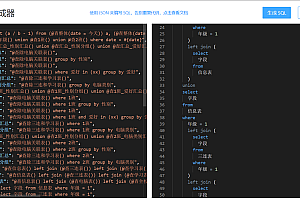

The frameworks above require programming to analyze data. Does that mean you cannot analyze data unless you are a backend engineer? Of course not. Big data is a very complete ecosystem, and where there is a demand there is a solution. To let people who are familiar with SQL analyze data, query analysis frameworks emerged; commonly used ones include Hive, Spark SQL, Flink SQL, Pig, and Phoenix. These frameworks allow flexible query analysis using standard SQL or an SQL-like syntax. The queries are parsed, optimized, and converted into jobs. For example, Hive converts SQL into MapReduce jobs, Spark SQL converts SQL into a series of RDDs and transformations, and Phoenix converts SQL queries into one or more HBase scans.
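To give a feel for what "converting SQL into a job" means, the following Python sketch runs the same aggregation twice: once as a SQL query and once as hand-written map, shuffle, and reduce steps. It is only an analogy. Python's built-in `sqlite3` module stands in for the query engine, the `orders` table and its rows are invented, and the map/shuffle/reduce functions are a simplification, not the plan Hive actually generates.

```python
import sqlite3
from collections import defaultdict

# Hypothetical order data: (customer, amount).
rows = [("alice", 30), ("bob", 20), ("alice", 15), ("carol", 5), ("bob", 10)]

# 1) The declarative version: what an analyst would write.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer TEXT, amount INTEGER)")
conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
sql_result = conn.execute(
    "SELECT customer, SUM(amount) FROM orders "
    "GROUP BY customer ORDER BY customer"
).fetchall()
conn.close()


# 2) The same logic spelled out as map, shuffle, and reduce steps.
def map_phase(records):
    """Map: emit a (key, value) pair for every input record."""
    for customer, amount in records:
        yield customer, amount


def shuffle(pairs):
    """Shuffle: group all values that share the same key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Reduce: collapse each group of values into a single sum."""
    return sorted((key, sum(values)) for key, values in groups.items())


mr_result = reduce_phase(shuffle(map_phase(rows)))

print(sql_result)
print(mr_result)
```

Both versions produce the same per-customer totals. The point of a query analysis framework is that you write only the first version and the framework generates something like the second for you.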

Data application

After the data analysis is complete, the next step is applying the data, which depends on your actual business needs. For example, you can visualize the data, or use it to optimize a recommendation algorithm. Recommendation is now widely used, for example in personalized short-video recommendation, e-commerce product recommendation, and headline news recommendation. You can also use the data to train a machine learning model. That belongs to other fields, which have their own frameworks and technology stacks, so I won't go into the details here.

Image source:

https://www.edureka.co/blog/hadoop-ecosystem
