Spiderflow, the project recommended in this issue, is a platform for building crawlers by drawing flow charts. A simple crawler can be put together quickly without writing any code.

Project characteristics

- Support CSS selectors and regular-expression extraction

- Support JSON/XML format

- Support XPath/JSONPath extraction

- Support multiple data sources and SQL select/insert/update/delete

- Support crawling pages rendered dynamically by JavaScript

- Support proxies

- Support binary format

- Support saving and reading files (csv, xls, jpg, etc.)

- Common functions for strings, dates, files, encryption and decryption, random values, and more

- Support process nesting

- Support plug-in extensions (custom executors, custom functions, custom controllers, type extensions, etc.)

- Support HTTP interface

Installation and deployment

Prepare the environment

JDK >= 1.8

MySQL >= 5.7

Maven >= 3.0 (download address: http://maven.apache.org/download.cgi)

Run the project

- Go to the Gitee code download page (https://gitee.com/ssssssss-team/spider-flow) and download the project into your working directory

- Configure the Maven repository in Eclipse: go to Window -> Preferences -> Maven -> User Settings, click Browse next to User Settings, select the settings.xml file in the conf directory of your Maven installation, click Apply, then OK

- Import the project into Eclipse: go to File -> Import, select Maven -> Existing Maven Projects, click Next, select the working directory, then click Finish

- Import the database; the base tables are defined in spider-flow/db/spiderflow.sql

- Open and run org.spiderflow.SpiderApplication.java

- Open a browser and go to http://localhost:8088/

Import plug-in

- First download the required plug-ins, then import them into your workspace or install them into your local Maven repository

- Add the plug-in as a dependency in spider-flow/spider-flow-web/pom.xml

<!-- Take the mongodb plug-in as an example -->
<dependency>
    <groupId>org.spiderflow</groupId>
    <artifactId>spider-flow-mongodb</artifactId>
</dependency>

Quick Start

Crawl node

This node is used to request HTTP/HTTPS pages or APIs

- Request method: GET, POST, PUT, DELETE and other methods

- URL: request address

- Delay time: how long to wait before crawling, in milliseconds

- Timeout: the timeout of the network request, also in milliseconds

- Proxy: The proxy set at the time of request, in the format of host:port, for example, 192.168.1.26:8888

- Encoding format: the page encoding, UTF-8 by default; change this value if garbled characters appear during parsing

- Follow redirection: 30x redirects are followed by default; uncheck this when it is not needed

- TLS certificate verification: checked by default; if a certificate-related exception occurs, try unchecking it

- Automatic Cookie management: cookies are set automatically on each request, including cookies you set manually and cookies obtained from earlier requests

- Automatic deduplication: when checked, URLs are deduplicated and a repeated URL is skipped

- Number of retries: how many times to retry when the request fails or the status code is not 200

- Retry interval: the interval between retries, in milliseconds

- Parameter: sets the request parameters for GET, POST, and other methods

- Parameter name: parameter key

- Parameter value: Parameter value

- Parameter description: only describes the parameter (like a remark or comment) and has no effect on the request

- Cookie: used to set the request Cookie

- Cookie name: Cookie key

- Cookie value: Cookie value

- Description: only describes the Cookie (like a remark or comment) and has no effect on the request

- Header: sets the request header

- Header name: Header key

- Header value: Header value

- Description: only describes the Header (like a remark or comment) and has no effect on the request

- Body: request body type (default is none)

- form-data (Body item is set to form-data)

- Parameter name: Request parameter name

- Parameter value: Request parameter value

- Parameter type: text/file

- File name: file name required when uploading binary data

- raw (set the Body item to raw)

- Content-Type: text/plain, application/json

- Content: Request body content (String type)
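The delay, deduplication, and retry settings above interact in a way that is easier to see in code. The following is a minimal Python sketch of that behaviour, not Spiderflow's implementation: the `crawl` function, its parameter names, and the injected `fetch` callable are all hypothetical, and marking a URL as seen before the request succeeds is an assumption.

```python
import time
from typing import Callable, Optional, Set, Tuple


def crawl(
    url: str,
    fetch: Callable[[str], Tuple[int, str]],  # hypothetical: returns (status code, body)
    seen: Set[str],
    retries: int = 0,
    retry_interval_ms: int = 0,
    delay_ms: int = 0,
    dedupe: bool = False,
) -> Optional[str]:
    """Return the response body, or None if the URL was skipped or every attempt failed."""
    if dedupe:
        if url in seen:
            return None  # automatic deduplication: a repeated URL is skipped
        seen.add(url)  # assumption: the URL counts as seen even if the request later fails

    time.sleep(delay_ms / 1000)  # delay time before crawling

    for attempt in range(retries + 1):  # one initial attempt plus `retries` retries
        try:
            status, body = fetch(url)
            if status == 200:
                return body
        except Exception:
            pass  # an abnormal request is treated like a non-200 status
        if attempt < retries:
            time.sleep(retry_interval_ms / 1000)  # retry interval
    return None
```

Passing `fetch` in as a parameter keeps the sketch independent of any particular HTTP library, so the retry and deduplication logic can be exercised without network access.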

Define variable

This node defines variables, which can then be used in expressions to set parameters dynamically (for example, the address of a paginated request)

- Variable name: the name of the variable; if the name is already in use, the new definition overwrites the previous variable

- Variable value: the value of the variable, either a constant or an expression
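The overwrite rule and the paging use case can be illustrated with a small Python sketch. It uses the standard library's `string.Template` as a stand-in for an expression engine; Spiderflow's own expression syntax and evaluation are not reproduced here, and the `define_var` and `render` helpers and the example.com URL are hypothetical.

```python
from string import Template

variables = {}


def define_var(name, value):
    # Defining a variable whose name already exists overwrites the previous value.
    variables[name] = value


def render(text):
    # Substitute ${name} placeholders with the current variable values.
    return Template(text).substitute(variables)


define_var("page", 1)
first_url = render("https://example.com/list?page=${page}")

define_var("page", 2)  # same name: the earlier value is replaced
second_url = render("https://example.com/list?page=${page}")
```

Here `first_url` ends in `page=1` and `second_url` in `page=2`, which is the pattern a paginated crawl relies on.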

Output node

This node is mainly used for debugging: its output is printed to the page during testing. It can also be used to save results automatically to a database or a file.

- Output to database: when checked, fill in the data source and table name; each output item must correspond to a column name

- Output to CSV file: when checked, enter the CSV file path; the output items are used as the header row

- Output all parameters: generally used for debugging; outputs all variables to the interface

- Output item: name of the output item

- Output value: The output value, which can be a constant or an expression
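To show how output items map onto a CSV header, here is a minimal Python sketch using the standard `csv` module. It is only an illustration of the idea; the `write_rows` helper, the item names, and the sample row are hypothetical, and an in-memory buffer stands in for the CSV file path.

```python
import csv
import io


def write_rows(stream, output_items, rows):
    # The output item names become the CSV header; each row supplies one value per item.
    writer = csv.DictWriter(stream, fieldnames=output_items)
    writer.writeheader()
    for row in rows:
        writer.writerow(row)


buffer = io.StringIO()  # stands in for the CSV file configured on the node
write_rows(
    buffer,
    ["title", "url"],
    [{"title": "Hello", "url": "https://example.com/1"}],
)
csv_text = buffer.getvalue()
```

The same mapping applies to database output, where each output item name would be matched to a column name instead of a header cell.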

Loop node

- Count or collection: when this item has a value (a number or a collection), this node and the nodes after it are executed in a loop

- Loop variable: defaults to item, with the same meaning as item in for (Object item : collections)

- Loop subscript: the name of the variable that stores the loop index (starting at 0), with the same meaning as i in for (int i = 0; i < array.length; i++)

- Start position: loop from this position (starting from 0)

- End position: loop up to this position (-1 is the last item, -2 is the second-to-last item, and so on)
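The start and end positions, including the negative end values, can be sketched in Python as follows. This is an interpretation rather than Spiderflow's code: the `loop` function is hypothetical, the end position is treated as inclusive, and the index is assumed to be the item's position in the original collection.

```python
from typing import Any, Iterator, List, Tuple, Union


def loop(
    count_or_collection: Union[int, List[Any]],
    start: int = 0,
    end: int = -1,
) -> Iterator[Tuple[int, Any]]:
    """Yield (index, item) pairs from start to end, both inclusive."""
    if isinstance(count_or_collection, int):
        items = list(range(count_or_collection))  # a number means "loop this many times"
    else:
        items = list(count_or_collection)
    # A negative end counts from the back: -1 is the last item, -2 the second-to-last.
    last = end if end >= 0 else len(items) + end
    for index in range(start, min(last, len(items) - 1) + 1):
        yield index, items[index]


all_three = list(loop(3))
middle = list(loop(["a", "b", "c", "d"], start=1, end=-2))
```

With these inputs, `all_three` visits indexes 0 to 2, and `middle` visits only "b" and "c".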

Execute SQL

Mainly used to interact with the database (query/modify/insert/delete, etc.)

- Data source: select a configured data source

- Statement type: select/selectInt/selectOne/insert/insertofPk/update/delete

- SQL: the SQL statement to execute; parameters that need to be injected dynamically are wrapped in # characters, for example #${item[index].id}#
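One plausible way an engine could treat the # markers is to turn each wrapped expression into a bound parameter. The Python sketch below shows that idea only; the source does not say how Spiderflow processes these markers internally, and the `to_parameterized` helper and the sample statement are hypothetical.

```python
import re

# Matches an expression wrapped in # characters, such as #${item[index].id}#
MARKER = re.compile(r"#(\$\{.+?\})#")


def to_parameterized(sql):
    """Replace each #${...}# marker with ? and collect the expressions in order."""
    expressions = []

    def replace(match):
        expressions.append(match.group(1))
        return "?"

    return MARKER.sub(replace, sql), expressions


statement, expressions = to_parameterized(
    "select * from article where id = #${item[index].id}# and site = #${site}#"
)
```

After this call, `statement` contains two `?` placeholders and `expressions` lists the two expressions in the order they appeared, ready to be evaluated and bound.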

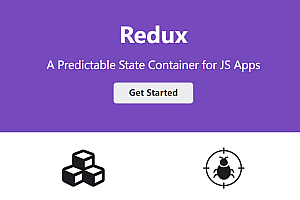

Process execution

Process Instance 1

It is easy to see that the execution order is A -> B -> C -> D. However, node A is a loop; assuming it loops 3 times, the execution becomes A,A,A -> B,B,B -> C,C,C -> D,D,D. (The three A's are started together, but their order is not fixed, and each execution flows directly on to the next node instead of waiting for all three A's to finish.) Once D,D,D have been executed there is no further node to flow to, so the whole process ends.

Loops can also be set on nodes B, C, and D. Assuming node C is also a loop that runs 2 times, the execution becomes A,A,A -> B,B,B -> C,C,C,C,C,C -> D,D,D,D,D,D (that is, a nested loop).
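The execution counts in this example follow from multiplying the loop counts along the chain. A minimal Python sketch of that arithmetic, with a hypothetical `execution_counts` helper and assuming a simple linear chain of nodes:

```python
def execution_counts(chain, loop_counts):
    """Return how many times each node in a linear chain runs."""
    counts = {}
    running = 1
    for node in chain:
        # A loop on a node multiplies the executions of that node and every later node.
        running *= loop_counts.get(node, 1)
        counts[node] = running
    return counts


single_loop = execution_counts(["A", "B", "C", "D"], {"A": 3})
nested_loop = execution_counts(["A", "B", "C", "D"], {"A": 3, "C": 2})
```

`single_loop` gives 3 executions for every node, and `nested_loop` gives 3 for A and B but 6 for C and D, matching the two cases described above.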

Process Instance 2

- Running sequence: A -> B -> A,C -> B -> C

- Execute node A

- When node A finishes, execute node B

- When node B finishes, execute nodes A and C

- In total, A is executed twice, B twice, and C twice.

This forms a recursion, A <-> B. In such cases a condition is usually needed to limit it, which here is the page number < 3 condition shown in the figure.
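The running sequence can be reproduced with a small queue-based simulation in Python. This is a sketch under assumptions, since the flow chart itself is only available as an image: the page counter is assumed to start at 1 and to be incremented when node B runs, and the edge from B back to A is assumed to carry the page < 3 condition.

```python
from collections import deque


def run(max_page=3):
    """Simulate the flow A -> B, B -> A (only while page < max_page), B -> C."""
    page = 1  # assumption: paging starts at 1
    order = []
    queue = deque(["A"])
    while queue:
        node = queue.popleft()
        order.append(node)
        if node == "A":
            queue.append("B")
        elif node == "B":
            page += 1  # assumption: the page number advances when B runs
            if page < max_page:
                queue.append("A")  # conditional edge that forms the recursion
            queue.append("C")
    return order


order = run()
```

With the limit at 3, `order` is A, B, A, C, B, C, so each node runs twice. Without the condition on the edge back to A, the queue would never empty and the process would not end.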

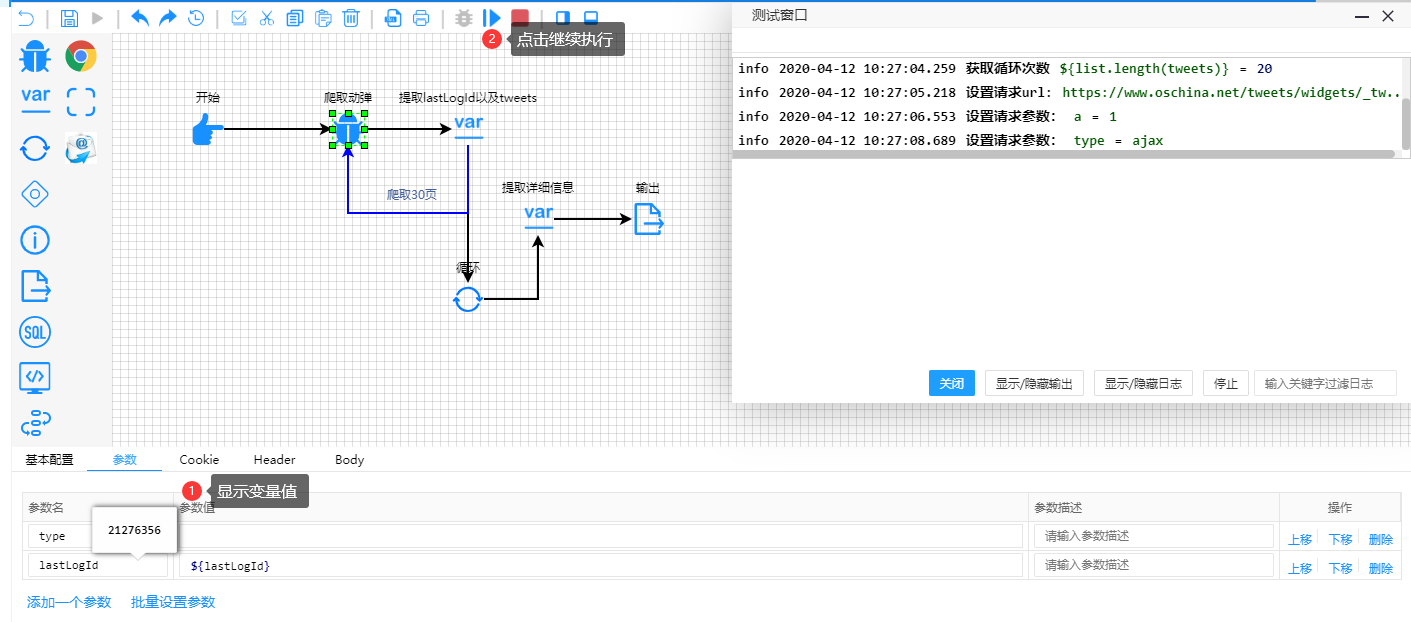

Project screenshots

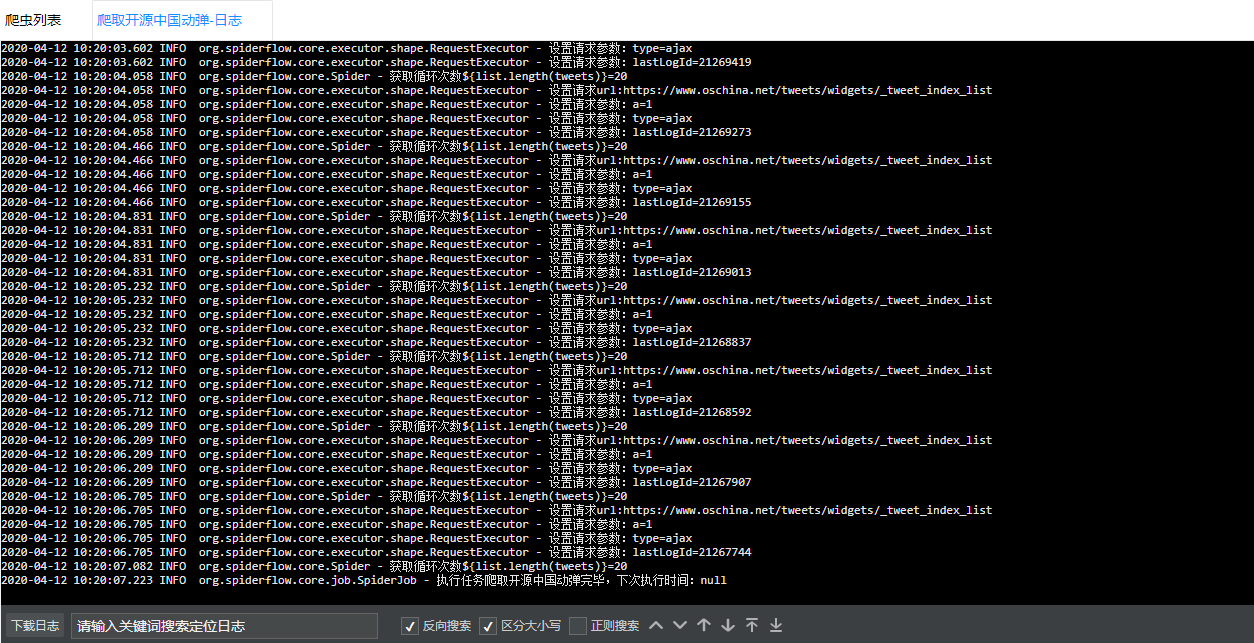

Crawler test

debug